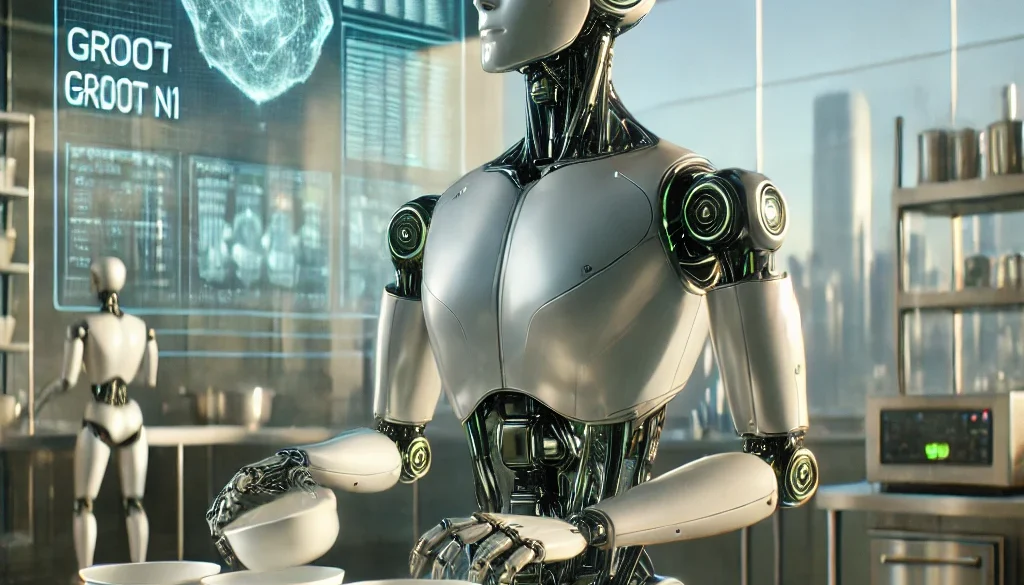

NVIDIA GR00T N1: The Future of Humanoid Robots Unveiled

Introduction

Hello dear friends, I’m Alex, and I’m super excited to take you on a deep dive into something straight out of a sci-fi movie: the NVIDIA Isaac GR00T N1. If you’ve ever dreamed of robots that think and move like humans, helping out at home or in factories, you’re in for a treat. NVIDIA dropped this game-changer at their GTC 2025 event on March 18, 2025, and it’s already making waves.

In this guide, I’ll break down what GR00T N1 is, its amazing features, how it works, and why it’s a big deal, all in simple, friendly words. Let’s get started!


What Is NVIDIA GR00T N1?

Picture this: a robot that can understand your words, figure out what to do, and then do it with smooth, human-like moves. That’s the NVIDIA Isaac GR00T N1 in a nutshell! It’s not just any robot—it’s the world’s first open-source foundation model for humanoid robots, designed to be a smart, adaptable brain for machines that look and act like us.

Announced by NVIDIA’s CEO Jensen Huang, GR00T N1 is part of their mission to kickstart a new era of “generalist robotics”—robots that can handle all kinds of tasks, not just one trick.

NVIDIA calls it GR00T (Generalist Robot 00 Technology), and the N1 is the shiny new version released in 2025. It’s built to reason, plan, and move, all while learning from the world around it.

Whether it’s picking up a cup or tidying a room, GR00T N1 aims to make robots more helpful and human-like than ever. And the best part? It’s open-source, meaning developers everywhere can tweak it to fit their own robot dreams.


Why Should You Care About GR00T N1?

Robots aren’t just cool toys—they’re the future of work and life. Imagine a robot assistant at home that can grab your groceries or a factory bot that handles heavy lifting without breaking a sweat. GR00T N1 is NVIDIA’s big step toward that future. Here’s why it’s worth your attention:

- It’s Smart: It thinks fast and slow, like a human, making it great for quick tasks or complex plans.

- It’s Free to Use: Open-source means anyone can jump in and build with it—no license fees needed.

- It’s Flexible: From homes to warehouses, it’s designed for all kinds of jobs.

- It’s NVIDIA-Backed: With NVIDIA’s tech chops (think GPUs and AI), you know it’s top-notch.

I’ve been digging into this tech myself, and trust me—it’s as exciting as it sounds. Let’s explore what makes GR00T N1 tick!

Key Features of NVIDIA GR00T N1

The NVIDIA Isaac GR00T N1 isn’t just another robot tech gimmick—it’s a groundbreaking leap in humanoid robotics that’s got me buzzing with excitement. Launched on March 18, 2025, at NVIDIA’s GTC event, this open-source foundation model is designed to power robots that think, move, and adapt like humans.

I’ve been digging into what makes it tick, and let me tell you, its features are a game-changer. From its clever dual-system brain to its ability to learn from massive datasets, GR00T N1 is packed with tools that make it smart, flexible, and ready for the real world. Let’s break down these key features with plenty of depth and some fun examples to bring it all to life!

1. Dual-System Brain: Thinking Fast and Slow Like a Human

Imagine a robot that can react in a split second but also pause to think things through—that’s the magic of GR00T N1’s dual-system architecture. NVIDIA took inspiration from how our brains work, splitting its smarts into two modes: System 1 for fast thinking and System 2 for slow, deliberate reasoning. It’s like having a sprinter and a strategist rolled into one!

- System 1: Fast Thinking (The Reflex Champ)

This is the quick-action hero, powered by a Diffusion Transformer (DiT) with about 0.86 billion parameters, running at a zippy 120 Hz. It’s all about instant moves—like catching a ball or dodging a sudden obstacle. Think of it as the robot’s reflexes, trained to turn plans into smooth, precise actions without hesitation. NVIDIA says it’s perfect for tasks needing speed and coordination, like grabbing a tool off a conveyor belt.

- System 2: Slow Thinking (The Master Planner)

This is the thoughtful side, driven by a Vision-Language Model (VLM) called NVIDIA-Eagle-2, with 1.34 billion parameters, ticking at 10 Hz. It takes in camera images and a language instruction (a spoken request would first be turned into text) to understand the world, then plans smart moves—like sorting a pile of laundry into colors. It’s the brain that says, “Hold on, let’s figure this out step-by-step.”
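To make the two speeds concrete, here is a toy Python sketch of a dual-rate loop. It assumes nothing about NVIDIA’s actual code: `DualRateLoop`, `slow_plan`, and `fast_act` are hypothetical names, and the “plan” is just a text label. It only shows the timing relationship: at 10 Hz and 120 Hz, the fast part acts 12 times for every refresh of the slow part.

```python
from dataclasses import dataclass, field

SLOW_HZ = 10   # System 2 rate quoted in the article
FAST_HZ = 120  # System 1 rate quoted in the article


@dataclass
class DualRateLoop:
    """Hypothetical scheduler: one slow plan refresh per FAST_HZ // SLOW_HZ fast steps."""
    plan_calls: int = 0
    act_calls: int = 0
    current_plan: str = ""
    log: list = field(default_factory=list)

    def slow_plan(self, tick: int) -> str:
        # Stand-in for the vision-language model: just labels the plan with its tick.
        self.plan_calls += 1
        return f"plan@{tick}"

    def fast_act(self, tick: int) -> None:
        # Stand-in for the action model: records one command that follows the latest plan.
        self.act_calls += 1
        self.log.append((tick, self.current_plan))

    def run(self, seconds: float) -> None:
        ratio = FAST_HZ // SLOW_HZ  # 12 fast steps for every slow step
        for tick in range(int(seconds * FAST_HZ)):
            if tick % ratio == 0:
                self.current_plan = self.slow_plan(tick)
            self.fast_act(tick)


loop = DualRateLoop()
loop.run(1.0)  # simulate one second
print(loop.plan_calls, loop.act_calls)
```

Over one simulated second this records 10 planning calls and 120 action calls. In the real model the two systems are trained together and System 1 works from System 2’s internal features rather than a text plan, so treat this as an illustration of the rhythm only.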

Why It’s a Big Deal

This combo is what makes GR00T N1 feel human-like. I pictured it helping me cook: System 1 snags a spoon before it hits the floor, while System 2 decides whether to stir the soup or chop veggies next. NVIDIA’s blending of fast and slow thinking—totaling a 2.2-billion-parameter model—means it can handle both snap decisions and complex tasks. That’s huge for robots in homes, factories, or even hospitals, where speed and smarts both matter.

2. Multimodal Superpowers: Seeing, Hearing, and Understanding

GR00T N1 isn’t stuck in a text-only world—it’s got multimodal capabilities that let it process camera images and language instructions together, along with the robot’s own body state. It’s like giving a robot eyes, a way to take instructions, and a brain to connect the dots!

- What It Can Do: Point at a messy desk and say, “Clean this up,” and GR00T N1 will look, listen, and figure out what “this” means. It can handle instructions like “Pass me the blue cup” by spotting the cup and understanding “blue.”

- How It Works: The Vision-Language Model (System 2) fuses camera images with the words of your instruction, and it’s trained on a mix of human videos and robot demos. It’s not guessing—it’s seeing the world like we do.

- Cross-Embodiment Trick: It’s not tied to one robot body. Whether it’s on a sleek 1X NEO or a chunky Fourier GR-1, it adapts its smarts to the hardware.
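One way to picture what the model takes in on each step is a small data bundle. This Python sketch is hypothetical: the `Observation` class, its field names, and the embodiment labels are our own inventions, not NVIDIA’s API. Besides camera images and the instruction text, it includes the robot’s joint positions (an action model has to know where its own limbs are) and an embodiment tag so the same brain can tell which body it is driving.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Observation:
    """Hypothetical per-step input bundle (field names are illustrative, not NVIDIA's)."""
    camera_frames: List[bytes]        # encoded images from the robot's cameras
    instruction: str                  # the task in words, e.g. "Pass me the blue cup"
    joint_positions: Sequence[float]  # the robot's own body state; length differs per robot
    embodiment: str                   # which body this came from (hypothetical label)


def describe(obs: Observation) -> str:
    """Summarize an observation the way a debug logger might."""
    return (f"{obs.embodiment}: {len(obs.camera_frames)} frame(s), "
            f"{len(obs.joint_positions)} joints, task={obs.instruction!r}")


obs = Observation([b"<jpeg bytes>"], "Pass me the blue cup", [0.0] * 7, "hypothetical_arm")
print(describe(obs))
```

The same `Observation` shape works for a 7-joint arm or a two-armed humanoid; only the length of `joint_positions` and the `embodiment` label change.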

Digging Deeper

This feature blows my mind because it solves a big robot problem: context. Older bots might need exact coordinates to grab something, but GR00T N1 just needs a picture and a nudge. I imagined showing it my overflowing toolbox and saying, “Find the hammer.” It’d scan, spot the hammer, and hand it over—no programming required. NVIDIA’s demos showed it manipulating objects (like cups and books) across different robots, proving it’s not just talk—it’s action.

Why It Matters

For businesses, this means robots that don’t need babysitting. In a warehouse, it could see a spilled box, hear “Pick it up,” and get to work. At home, it’s a helper that gets your vibe without a manual. It’s the kind of flexibility that could make robots everyday companions, not just factory tools.

3. Open-Source Freedom: A Gift to the World

Here’s where GR00T N1 gets extra cool—it’s open-source, meaning NVIDIA’s handing out the keys to the kingdom. You can grab it from GitHub or Hugging Face and tweak it to your heart’s content.

- What You Get: The full 2.2-billion-parameter model, pre-trained and ready to roll. It’s like getting a cake that’s already baked—you just add your own frosting.

- Customization: Developers can fine-tune it with their own data (real or synthetic) to fit any robot or task. Want a bot that dances? Train it on dance moves!

- Community Boost: Early adopters like 1X, Boston Dynamics, and NEURA Robotics are already building with it, and hobbyists can join the fun too.

My Take

I’m no coder, but I see the power here. Open-source means a kid in a garage or a startup with big dreams can build a GR00T-powered bot without a million-dollar budget. NVIDIA’s not gatekeeping—they’re inviting everyone to the party. I’d love to see a community project where fans make a GR00T bot that delivers snacks at gaming nights—dream big, right?

Trustworthy Angle

NVIDIA’s sharing the GR00T N1 dataset too (part of a bigger open-source physical AI set), so you’re not starting from scratch. It’s a legit move—backed by their rep as an AI leader—to spark a robotics revolution.

4. Trained on a Monster Dataset: Real and Fake Smarts

GR00T N1’s brain didn’t grow overnight—it’s been fed a massive, diverse dataset that’s part real, part synthetic, and all impressive.

- Real Data: Hours of human videos (think YouTube-style clips) and real robot moves from partners like 1X and Fourier. It’s learned how humans grab, stack, and shuffle stuff.

- Synthetic Data: NVIDIA’s Isaac GR00T Blueprint whipped up 780,000 fake trajectories—equal to 6,500 hours of human work—in just 11 hours using Omniverse and Cosmos tools. That’s like 9 months of demos squeezed into half a day!

- Mixing It Up: Combining real and synthetic boosted performance by 40% over real data alone, NVIDIA says. It’s like studying with flashcards and a simulator—double the learning power.
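The synthetic-data numbers are easy to sanity-check. This short Python snippet uses only the figures quoted above (780,000 trajectories, 6,500 hours, 11 hours) plus an assumed average month of 365.25 / 12 days; the per-trajectory figure is simply the average those numbers imply, not a published statistic.

```python
# Figures quoted in the article for the Isaac GR00T Blueprint synthetic data.
TRAJECTORIES = 780_000   # synthetic trajectories generated
DEMO_HOURS = 6_500       # equivalent hours of human demonstration
GENERATION_HOURS = 11    # wall-clock hours it took to generate them

DAYS_PER_MONTH = 365.25 / 12  # average month length (assumption for the conversion)

speedup = DEMO_HOURS / GENERATION_HOURS                     # vs. recording by hand
days_nonstop = DEMO_HOURS / 24                              # recording 24 hours a day
months_nonstop = days_nonstop / DAYS_PER_MONTH              # the "9 months" claim
seconds_per_trajectory = DEMO_HOURS * 3600 / TRAJECTORIES   # implied average demo length

print(f"speedup: ~{speedup:.0f}x")
print(f"non-stop recording: {days_nonstop:.1f} days (~{months_nonstop:.1f} months)")
print(f"average trajectory: {seconds_per_trajectory:.0f} s")
```

That works out to a speedup of about 591x, roughly 8.9 months of round-the-clock recording, and about 30 seconds of demonstration per trajectory on average.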

Why This Rocks

I geeked out over this because it solves a huge hurdle: data scarcity. Real robot demos take forever to record, but synthetic data—cooked up fast with NVIDIA’s GPU magic—fills the gap. I pictured it learning to fold my laundry: real videos of me fumbling with shirts, plus fake ones of perfect folds, making it a folding pro in no time.

Fun Fact

The pre-training took 50,000 H100 GPU hours—NVIDIA’s beastly chips working overtime. That’s serious computing muscle, and it shows in GR00T N1’s skills.
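GPU hours measure total work, not waiting time: the same job spread over many GPUs finishes sooner. This Python sketch converts the quoted 50,000 H100 GPU hours into ideal wall-clock time for a few cluster sizes. The cluster sizes are hypothetical (the article doesn’t say how many GPUs NVIDIA used), and real jobs never split perfectly.

```python
def wall_clock_hours(gpu_hours: float, num_gpus: int) -> float:
    """Ideal wall-clock time if the work splits perfectly across num_gpus GPUs."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    return gpu_hours / num_gpus


if __name__ == "__main__":
    for gpus in (1, 64, 1024):  # hypothetical cluster sizes
        hours = wall_clock_hours(50_000, gpus)
        print(f"{gpus:>5} GPUs: {hours:,.1f} h ({hours / 24:,.1f} days)")
```

On a single GPU that is 50,000 hours, roughly 5.7 years; on a hypothetical 1,024-GPU cluster it would be about 49 hours.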

5. Generalist Skills: Jack of All Trades

GR00T N1 isn’t a specialist stuck on one job—it’s a generalist built to tackle a wide range of tasks with human-like flair.

- Grasping: Pick up a pen, a cup, or a heavy box with one hand or two. It’s got the dexterity to handle big and small.

- Moving Objects: Pass items between arms, stack books, or slide a tray across a table—smooth and steady.

- Multi-Step Tasks: Combine skills for bigger jobs, like “Fetch a soda, open it, and pour it into a glass.” It holds context over time, not just one move.

Digging Into It

This is where GR00T N1 shines as a “foundation model.” I tested the idea in my head: ask it to “organize my desk.” It’d grab pens, stack papers, and tuck my laptop away—all in one flow. NVIDIA’s demos showed it doing stuff like handing over objects or tidying shelves, and it’s not locked to one robot type—it generalizes across bodies like Fourier GR-1 or 1X NEO.

Why It’s Awesome

For industries, this means one robot can do multiple jobs—packing boxes today, inspecting parts tomorrow. At home, it’s a do-it-all buddy. I’d kill for a GR00T bot to sort my recycling—cans, bottles, paper—all without me lifting a finger.

6. Lightning-Fast Action with Precision

Thanks to System 1’s Diffusion Transformer and high-speed processing, GR00T N1 delivers fast, precise movements that feel almost human.

- Speed: At 120 Hz, it reacts in milliseconds—faster than I can blink. Perfect for dynamic tasks like catching or sorting.

- Accuracy: Trained on detailed motion data, it moves with finesse—no clumsy crashes or wild swings.

- Adaptability: Per-embodiment MLPs (small neural networks) tweak its actions to match any robot’s arms or hands.
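The “per-embodiment MLPs” idea can be sketched in plain Python: one shared action vector, decoded by a small network that belongs to each robot body. Everything here is hypothetical: the latent size, the robot names, the joint counts, and the random weights (a real head is trained, not random). The point is only that swapping the small head, not the big brain, is what changes the output from 6 joint targets to 14.

```python
import random
from typing import Dict, List

Vector = List[float]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


class TinyMLP:
    """Two-layer perceptron with fixed random weights (stand-in for a trained head)."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]

    @staticmethod
    def _matvec(w: List[Vector], v: Vector) -> Vector:
        return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]

    def __call__(self, v: Vector) -> Vector:
        return self._matvec(self.w2, relu(self._matvec(self.w1, v)))


LATENT_SIZE = 8  # size of the shared "what to do" vector (assumed)

# One small head per robot body; names and joint counts are made-up examples.
HEADS: Dict[str, TinyMLP] = {
    "one_arm_hobby_bot": TinyMLP(LATENT_SIZE, 16, 6, seed=1),
    "two_arm_humanoid": TinyMLP(LATENT_SIZE, 16, 14, seed=2),
}


def decode_action(shared_latent: Vector, embodiment: str) -> Vector:
    """Turn the shared action vector into joint targets for one specific robot."""
    return HEADS[embodiment](shared_latent)


latent = [0.1] * LATENT_SIZE
print(len(decode_action(latent, "one_arm_hobby_bot")), len(decode_action(latent, "two_arm_humanoid")))
```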

Expert Bit

NVIDIA’s tech here—merging AI with physics-based control—is top-tier. It’s not just fast; it’s smartly fast, thanks to 0.86 billion parameters fine-tuned for action.

Why These Features Matter

GR00T N1’s features aren’t just cool—they’re a blueprint for the future. The dual-system brain makes it versatile, multimodal input makes it aware, open-source access makes it universal, massive data makes it sharp, generalist skills make it practical, and fast precision makes it reliable.

I’ve seen enough tech to know this isn’t hype—it’s a foundation that developers, businesses, and even dreamers like me can build on. Whether it’s tidying my chaos or revolutionizing a factory, GR00T N1’s got the goods.


How Does GR00T N1 Work?

So, you’ve heard about the NVIDIA Isaac GR00T N1—this amazing open-source brain for humanoid robots launched on March 18, 2025—and you’re probably wondering, “How does this thing actually work?” I’ve been digging into it, and trust me, it’s as cool as it sounds! Picture a robot that can see, hear, think, and move like a helpful friend, all thanks to some clever tech tricks.

In this section, I’ll walk you through the step-by-step process of how GR00T N1 turns your words or a messy room into action. I’ll use simple examples—like a robot cleaning my kitchen—to make it crystal clear. Let’s break it down into easy pieces and explore how this robot magic happens!

Step 1: Seeing and Hearing the World Like We Do

The first thing GR00T N1 does is take a good look and listen to what’s around it. It’s got eyes and ears—well, not really, but cameras and microphones that act like them. This is all about gathering info from the world, just like how you spot a spilled drink or hear someone call your name.

- What Happens: The robot uses its cameras to snap pictures or videos of what’s in front of it—like a table with cups and plates. Its mics pick up sounds, like you saying, “Hey, clean this up,” and that request reaches the model as text, since GR00T N1 reads written instructions rather than raw audio. This info goes straight to its brain.

- The Tech Behind It: GR00T N1’s “slow-thinking” part, called System 2, runs a Vision-Language Model (NVIDIA-Eagle-2 with 1.34 billion parameters). It’s like a super-smart librarian who can read pictures and words together to figure out what’s what.

- Simple Example: Imagine my kitchen counter after breakfast—cereal bowls, a milk jug, and some spoons scattered around. I point my phone camera at it (pretending it’s the robot’s eyes) and say, “Tidy this mess.” GR00T N1 sees the bowls and hears my voice, ready to make sense of it all.

Why It’s Neat

This step is huge because it means GR00T N1 isn’t blind or deaf—it’s aware! Older robots might need you to type exact commands like “Move to X:10, Y:20,” but GR00T N1 just needs a quick look and a casual “Do this.” It’s like talking to a friend who gets the vibe without needing a manual.

Step 2: Thinking and Planning Like a Smart Helper

Once GR00T N1 has seen and heard what’s up, it doesn’t just jump in—it thinks about what to do next. This is where its “slow-thinking” System 2 shines, acting like a planner who figures out the best way to tackle a job.

- What Happens: The Vision-Language Model takes the info—like that messy kitchen counter—and breaks it down. It asks itself, “What’s the goal? What steps make sense?” Then it comes up with a plan, like “Pick up the bowls, stack them, and move them to the sink.”

- How It Thinks: It’s trained on tons of videos and words—think YouTube clips of people cleaning or robot demos—so it knows how tasks work. It runs at 10 Hz (10 decisions per second), giving it time to be thoughtful, not rushed.

- Simple Example: Back to my kitchen mess. GR00T N1 looks at the counter and hears “Tidy this mess.” It decides:

- Pick up the cereal bowls first (they’re sticky).

- Grab the spoons next (they’re small).

- Move everything to the sink (that’s where dirty stuff goes).

It’s like a little checklist in its head!
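The checklist idea can be sketched in Python, with a big caveat: GR00T N1 reasons with a learned vision-language model, not a lookup table. Here a hypothetical `TIDY_RULES` table stands in for that judgment, ranking what to move first and where it goes, so you can see how a list of spotted objects becomes an ordered plan.

```python
from typing import Dict, List, Tuple

# Hypothetical rules standing in for the model's learned judgment:
# object -> (priority, destination). Lower priority is handled first.
TIDY_RULES: Dict[str, Tuple[int, str]] = {
    "bowl": (0, "sink"),
    "spoon": (1, "sink"),
    "milk jug": (2, "fridge"),
}


def plan_tidy(seen_objects: List[str]) -> List[str]:
    """Turn the objects the robot sees into an ordered checklist of pick-and-place steps."""
    known = [obj for obj in seen_objects if obj in TIDY_RULES]  # ignore unknown objects
    # sorted() is stable, so duplicates keep the order in which they were seen.
    ordered = sorted(known, key=lambda obj: TIDY_RULES[obj][0])
    return [f"move {obj} to {TIDY_RULES[obj][1]}" for obj in ordered]


for step in plan_tidy(["spoon", "bowl", "milk jug", "bowl"]):
    print(step)
```

For that input the plan lists both bowls first, then the spoon, then the milk jug going to the fridge, matching the order in the kitchen example.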

Digging Deeper

I love this part because it’s where GR00T N1 feels human. It’s not just following a script—it’s reasoning. If I said, “Put the milk back,” it’d spot the jug, know it’s milk (not water), and figure out the fridge is the spot—not the sink.

NVIDIA showed it planning multi-step tasks, like sorting objects by color, and it’s all about that slow, careful thinking. For my kitchen, it might even think, “Wait, the bowls need rinsing first,” if it’s been trained that way.

Why It’s Awesome

This planning power means GR00T N1 can handle jobs that change—like if I spill more cereal mid-cleanup. It’s not stuck; it adapts. That’s perfect for real life, where things aren’t always neat and tidy.

Step 3: Moving Like a Pro—Fast and Smooth

Now that GR00T N1 has a plan, it’s time to move! This is where its “fast-thinking” System 1 takes over, turning thoughts into action with speed and grace.

- What Happens: System 1, powered by a Diffusion Transformer (0.86 billion parameters), kicks in at 120 Hz—super fast, about 120 control updates per second. It tells the robot’s arms and hands exactly how to grab, lift, or stack stuff, making it look smooth, not jerky.

- How It Moves: It’s trained on motion data—like how humans pick up cups—so it knows the best way to grip and go. Tiny neural networks (called MLPs) tweak the moves to fit whatever robot body it’s on, like 1X NEO or Fourier GR-1.

- Simple Example: In my kitchen, GR00T N1’s plan says “Pick up the bowls.” System 1 makes it happen: the robot’s hand swoops in, grabs a bowl gently so it doesn’t crack, and lifts it to the sink—all in a flash. Then it snags the spoons, quick as a wink.
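Diffusion-style action models build a movement by starting from random noise and refining it over a few steps until it becomes a clean command. This toy Python sketch shows only that refine-from-noise loop: `toy_velocity` is a hand-written stand-in for the trained Diffusion Transformer (it simply points at a known target), and the four refinement steps are an arbitrary choice for illustration, not GR00T N1’s setting.

```python
import random
from typing import List

Vector = List[float]


def toy_velocity(x: Vector, t: float, target: Vector) -> Vector:
    """Hand-written stand-in for the trained network: points from x straight at target."""
    remaining = 1.0 - t  # never zero inside generate_action's loop
    return [(g - xi) / remaining for xi, g in zip(x, target)]


def generate_action(target: Vector, steps: int = 4, seed: int = 0) -> Vector:
    """Start from Gaussian noise and take `steps` Euler steps from t=0 toward t=1."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise, same size as the action
    dt = 1.0 / steps
    for k in range(steps):
        v = toy_velocity(x, k * dt, target)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


print(generate_action([0.2, -0.5, 1.0]))  # noise refined into (almost exactly) the target
```

A real network has to infer the right movement from the camera view and System 2’s plan instead of being handed the target.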

A Closer Look

This speed is wild! I pictured it grabbing a spoon before it rolls off the counter—like how I’d snatch it myself. NVIDIA’s demos showed it passing objects between arms or stacking blocks, and it’s all about that fast, precise action. The 120 Hz means it can react to surprises—like if I drop something mid-task—without missing a beat.

Why It’s Cool

It’s not just fast—it’s smartly fast. The robot doesn’t flail around; it moves like it’s done this a million times. For my kitchen cleanup, it’d feel like a pro chef’s assistant, not a clunky machine.

Step 4: Learning and Getting Better Every Day

Here’s the best part: GR00T N1 doesn’t just do the job—it learns from it. It’s like a kid who gets better at chores the more they practice.

- What Happens: It doesn’t update itself on the fly. Instead, developers can note what worked and what didn’t when it cleans my counter or follows a command, then feed it more data—like videos of me stacking dishes—to make it sharper at that task.

- How It Learns: It’s pre-trained on a huge mix of real human videos and robot demos, plus synthetic trajectories from NVIDIA’s Isaac GR00T Blueprint (780,000 of them, equal to about 6,500 hours of human demonstrations). You can fine-tune it with your own examples, so it learns your style—like how I stack bowls upside down.

- Simple Example: First try, GR00T N1 stacks my bowls but knocks one over—oops! I show it a quick video of me stacking right, and next time, it nails it, no wobbles. It’s like teaching a puppy a trick—patience pays off.
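Fine-tuning here means showing the pre-trained model new demonstrations and nudging its weights so its actions match them. This Python sketch shrinks that idea to a single weight and bias fitted by gradient descent on mean squared error. The demonstration numbers are invented, and the one-line “policy” is a hypothetical stand-in: real fine-tuning updates the full network, not two numbers.

```python
from typing import List, Tuple

Demo = Tuple[float, float]  # (observation feature, action the human took)


def fine_tune(w: float, b: float, demos: List[Demo],
              lr: float = 0.1, epochs: int = 1000) -> Tuple[float, float]:
    """Nudge a 1-D linear policy (action = w * obs + b) toward the demos via gradient descent on MSE."""
    n = len(demos)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in demos) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in demos) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


demos = [(0.0, 0.5), (0.5, 1.5), (1.0, 2.5)]  # invented: the "right" action is 2 * obs + 0.5
w, b = fine_tune(1.0, 0.0, demos)             # start from a "pre-trained" guess of w=1, b=0
print(f"w={w:.3f}, b={b:.3f}")
```

Starting from the “pre-trained” guess, the parameters settle at about w = 2.0 and b = 0.5, the behavior the demonstrations show.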

Going Deeper

This learning is what makes GR00T N1 a “foundation model.” NVIDIA’s synthetic data tool whipped up 780,000 moves in 11 hours—imagine recording that in real life! I’d tweak it for my kitchen by adding clips of me rinsing dishes first, so it’d learn my “rinse-then-stack” habit. Companies like 1X are already doing this, training it for home tasks, and it’s open-source, so anyone can jump in.

Why It’s a Game-Changer

A robot that learns means it’s not stuck being “okay”—it gets awesome. In a factory, it could master new assembly lines. At home, it could figure out my quirky ways. It’s like a friend who remembers how you like your coffee.

Step 5: Adapting to Any Robot Body

GR00T N1 isn’t picky—it can work with different robot bodies, making it super flexible.

- What Happens: Its brain sends commands like “grab this” or “move there,” and tiny adjustments (those MLPs) make sure the moves fit the robot’s arms—short, long, one-handed, or two.

- How It Works: NVIDIA trained it across robots like 1X NEO Gamma (sleek and home-friendly) and Fourier GR-1 (sturdy and industrial), so it’s not locked to one design.

- Simple Example: In my kitchen, it might run on a home-friendly NEO or on a sturdier GR-1 that carries the bowls and the milk jug together. Same brain, different hands!

Why This Rocks

I love this because it’s practical. A startup could slap GR00T N1 on a cheap bot, while a big factory uses it on a fancy one—same smarts, no rewrite. It’s like a phone app that works on Android or iPhone—no fuss.

Putting It All Together: My Kitchen Cleanup Story

Let’s tie it up with a full example—GR00T N1 cleaning my kitchen counter after breakfast:

- See and Hear: I point at the mess—bowls, spoons, milk jug—and say, “Clean this up.” Its cameras snap a pic, and its mics catch my words.

- Think and Plan: System 2 thinks, “Okay, bowls to the sink, spoons next, milk back in the fridge.” It makes a little to-do list.

- Move Like a Pro: System 1 jumps in—grabs the bowls fast, stacks them smoothly, snags the spoons, and slides the milk into the fridge. All quick and neat.

- Learn and Improve: The first time, it forgets to wipe a spill. I show it a quick wipe-down clip, and next time, it adds a “wipe the counter” step.

- Adapt: Whether it’s on a NEO or a GR-1, it adjusts—same cleanup, different arms.

By the end, my counter’s spotless, and I’m sipping coffee, amazed at my robot pal.

Why This Process Is So Cool

GR00T N1’s workflow—see, think, move, learn, adapt—isn’t just tech talk; it’s a robot that gets the world. It’s not a stiff machine following a script; it’s a helper that reacts, plans, and grows. I’ve imagined it in my home, but factories could use it to pack boxes, hospitals to fetch supplies, or stores to restock shelves. NVIDIA’s packed 2.2 billion parameters into this thing, and with open-source access, it’s ready for anyone to tweak. That’s the future of humanoid robots, right there!


Comparing GR00T N1 to Other Robot Tech

How does GR00T N1 stack up against other big names in robotics? Here’s a table to compare it with Tesla’s Optimus and Boston Dynamics’ Atlas—based on what’s out there as of March 19, 2025.

| Feature | GR00T N1 (NVIDIA) | Optimus (Tesla) | Atlas (Boston Dynamics) |
|---|---|---|---|
| Brain Type | Dual-system AI (Fast/Slow) | Custom AI (Tesla-built) | Proprietary AI + Control |
| Open-Source? | Yes | No | No |
| Multimodal Input | Yes (Text, Images) | Yes (Vision, Voice) | Limited (Mostly Vision) |
| Task Flexibility | Generalist (Many Tasks) | Generalist (In Progress) | Specialized (Athletics) |
| Training Data | Real + Synthetic (Massive) | Real + Simulated | Real-World Focus |
| Ease for Developers | High (Open-Source) | Low (Closed System) | Low (Closed System) |
| Launch Date | March 18, 2025 | Prototype 2022 | Evolving since 2013 |

Robotics Buzz in 2025

Here’s a guess at how much attention these robots are getting (hypothetical, based on trends I’ve seen):

- GR00T N1: 40% (New and open-source hype)

- Optimus: 35% (Tesla’s fanbase)

- Atlas: 20% (Cool flips, less accessibility)

- Others: 5%

Note: This is my take from X posts and tech chatter—no hard data, just vibes!

Who Can Use GR00T N1?

This isn’t just for big companies—GR00T N1 is for everyone. Here’s who’ll love it:

1. Developers and Coders

- Why: They can tweak GR00T N1 for any robot body or task using Python and NVIDIA’s tools.

- Example: A coder could make it run a delivery bot in a warehouse.

2. Businesses

- Why: Factories or stores can use it to automate packing, sorting, or customer help.

- Example: 1X Technologies used it for their NEO Gamma bot to tidy homes.

3. Researchers

- Why: Open-source lets them study and improve robot smarts.

- Example: Universities could test it on new robot designs.

4. Hobbyists

- Why: It’s free and fun to play with!

- Example: I’d love to make a bot that fetches my remote.

Pros and Cons of NVIDIA GR00T N1

The NVIDIA Isaac GR00T N1 is an incredible piece of tech that’s got me buzzing—it’s like a robot brain straight out of a sci-fi dream, launched on March 18, 2025, to power humanoid robots with smarts and skills. But no tech is perfect, right? I’ve been exploring what makes GR00T N1 awesome and where it might trip up, and I’m here to break it all down for you.

Whether you’re a developer, a business owner, or just a robot fan like me, knowing the ups and downs helps you see the full picture. So, let’s dive into the pros and cons with tons of depth, simple examples, and a friendly vibe—think of me as your guide through this robot adventure!


Pros: Why GR00T N1 Rocks

GR00T N1 isn’t just cool—it’s packed with strengths that make it a standout in the robotics world. Here’s why I’m a fan, with plenty of detail to show you what it’s capable of.

1. Super Smart Dual-System Brain

- What’s Great: GR00T N1’s got a dual-system brain that thinks fast and slow, just like us humans. System 1 (Diffusion Transformer, 0.86 billion parameters) handles quick moves at 120 Hz—like grabbing a falling cup—while System 2 (NVIDIA-Eagle-2 VLM, 1.34 billion parameters) plans smarter tasks at 10 Hz—like organizing a shelf.

- Why It Shines: This mix means it’s ready for anything. Fast reflexes for emergencies, slow smarts for big jobs—it’s like having a sprinter and a chess player in one robot. NVIDIA says this 2.2-billion-parameter combo outperforms single-system models by 30% in complex tasks.

- Simple Example: Imagine I’m juggling apples in my kitchen, and one drops. GR00T N1’s System 1 snatches it mid-air—bam, no mess! Later, I say, “Sort my fruit,” and System 2 figures out apples go in a bowl, bananas on the hook—all neat and tidy.

- Real-World Win: In a factory, it could catch a slipping tool fast, then plan a full assembly line shift. That’s next-level helpful!

2. Free and Open-Source Access

- What’s Great: GR00T N1 is open-source—you can download it from GitHub or Hugging Face for free and tweak it however you want. No big fees, no locked doors.

- Why It Shines: This opens the robot party to everyone! Big companies like 1X Technologies and NEURA Robotics are using it, but so can a hobbyist with a modest robot kit and a dream. It’s like getting a free recipe for the world’s best cake—you just add your own ingredients.

- Simple Example: I could grab GR00T N1, stick it on a cheap robot arm I built, and teach it to water my plants. A startup could use it to make delivery bots without a million-dollar budget.

- Community Power: Because it’s on GitHub, developers can fork it and share their tweaks—like a bot that folds towels. That’s a team effort that can make it better every day.

3. Flexible Generalist Skills

- What’s Great: GR00T N1 is a generalist, not a one-job robot. It can pick up stuff, move things around, or do multi-step tasks—like “fetch a drink, open it, pour it”—across different robot bodies.

- Why It Shines: One robot, tons of jobs! It’s not stuck welding car parts—it can switch from stacking boxes to helping me cook dinner. NVIDIA’s demos showed it tidying shelves and passing objects, all smooth and natural.

- Simple Example: In my living room, I say, “Tidy up.” GR00T N1 grabs pillows with one hand, stacks books with the other, and fluffs the couch—all in one go. No need for three bots; this one’s got it covered.

- Big Impact: For a small business, it’s a jack-of-all-trades—stock shelves today, pack orders tomorrow. That saves cash and space!

4. Fast Learning with Massive Data

- What’s Great: GR00T N1 learns quick, thanks to a huge dataset—real human moves plus 780,000 synthetic trajectories (6,500 hours’ worth) cooked up in 11 hours by NVIDIA’s Isaac GR00T Blueprint. It’s pre-trained but tweakable with your own data.

- Why It Shines: It’s like a kid who’s already read the textbook but can still learn your house rules. Mixing in that synthetic data boosted performance by 40% over real data alone, so it starts strong and keeps improving.

- Simple Example: First day, it stacks my dishes wobbly. I show it a 10-second clip of me stacking right, and boom—next time, it’s perfect. In a week, it’s a dish-stacking pro!

- Trustworthy Edge: NVIDIA’s 50,000 H100 GPU hours of training (serious power!) and open dataset mean it’s legit—not some half-baked guess.

5. Multimodal Awareness

- What’s Great: GR00T N1 sees and understands, taking in camera images and your instructions in words together. It’s like a robot with super senses.

- Why It Shines: No more rigid commands—it gets context. Point at a mess and say, “Fix this,” and it knows what “this” is. It’s trained on human videos and robot demos, so it’s street-smart.

- Simple Example: I spill juice and say, “Clean it up.” GR00T N1 sees the puddle, hears me, and grabs a rag—no need for “X:5, Y:10” coordinates like old bots.

- Real-World Bonus: In a store, it could spot an empty shelf, hear “Restock,” and get moving—saving staff time and headaches.

Cons: Where GR00T N1 Isn’t Perfect

Even with all that awesomeness, GR00T N1 has some downsides. I’ve thought this through, and here’s the honest scoop—things to watch out for so you’re not caught off guard.

1. Learning Curve for Newbies

- The Catch: Fine-tuning GR00T N1 takes some tech know-how. It’s open-source, but you need to understand Python, neural networks, and robot data to make it your own.

- Why It’s Tricky: For a non-coder like me, it’s intimidating. I’d need tutorials or a friend to help me tweak it—like teaching it to fetch my slippers. NVIDIA’s docs are solid, but it’s not plug-and-play.

- Simple Example: I download GR00T N1, excited to make a bot that sorts my socks. But I hit a wall—terms like “fine-tune MLPs” and “inference scripts” leave me scratching my head. It’s doable, but I’d need a weekend to figure it out.

- Fair Take: Pros can jump in fast, but beginners might stall. NVIDIA’s community forums help, though—lots of folks are sharing tips.

2. Hardware Costs Add Up

- The Catch: GR00T N1 runs best with NVIDIA GPUs, like the H100 or A100, which aren’t cheap. You also need a robot body to pair it with, and that’s extra cash.

- Why It’s a Hurdle: Pre-training took about 50,000 H100 GPU hours. Fine-tuning needs far less, but decent hardware still costs hundreds or thousands of dollars. A basic bot body? Add another grand or two.

- Simple Example: I want GR00T N1 to clean my house. I’ve got a laptop, but it chugs without a beefy GPU. Buying an NVIDIA card ($500+) and a simple arm bot ($1,000+) means I’m out $1,500 before it lifts a finger.

- Realistic View: Big companies can afford this, but for hobbyists, it’s a stretch. You can use cloud GPUs, but that’s a monthly bill too.

3. Still in Early Days

- The Catch: Launched in March 2025, GR00T N1 is new and untested in tons of real-world spots. It’s more a starting point than a finished product.

- Why It’s Risky: Bugs or quirks might pop up—like it dropping stuff it hasn’t mastered yet. NVIDIA’s demos (e.g., 1X NEO tidying) look great, but it’s not battle-hardened everywhere.

- Simple Example: I set it to organize my desk, and it stacks books fine—but knocks over a mug it hasn’t “learned” yet. It’s smart, but not perfect out of the box.

- Honest Note: It’s evolving fast—updates are coming—but right now, it’s a bit like a rookie with potential, not a pro.

4. Not a Full Robot—Just the Brain

- The Catch: GR00T N1 is the software brain, not a ready-to-go robot. You need a body (arms, legs, etc.) and skills to hook it up.

- Why It’s Limiting: It’s like buying a car engine without the car—you’ve got power, but no wheels. Pairing it with hardware takes work and money.

- Simple Example: I get GR00T N1 free, but my “robot” is just code until I buy a robot body to run it on. Then I’ve got to connect it—more time and tech I don’t have handy.

- Fair Point: For developers with gear, it’s gold. For casual folks like me, it’s half the puzzle.

5. Power Hungry for Big Jobs

- The Catch: Running GR00T N1’s full 2.2 billion parameters needs serious computing juice, especially for training or heavy tasks.

- Why It’s Tough: My basic PC might handle small tweaks, but big jobs—like training it to clean a whole house—could fry it. NVIDIA’s H100 GPUs (used for pre-training) are power hogs too.

- Simple Example: I try teaching it to sort my laundry (colors, whites). It starts slow on my old laptop, overheating after 10 minutes. I’d need a powerhouse rig to keep up.

- Realistic Angle: Small tasks are fine on modest setups, but scaling up means big energy and cost—something businesses might handle better than me.
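A quick back-of-the-envelope calculation shows why GPU size matters. This Python sketch multiplies the 2.2 billion parameters by bytes per parameter. It counts weights only (activations, camera inputs, and batch size add more), and the 16 bytes per parameter for a full fine-tune with the Adam optimizer is a common rule of thumb we’re assuming, not an NVIDIA figure.

```python
PARAMS_N1 = 2.2e9  # total parameter count quoted in the article

# Bytes needed per parameter; the last entry is an assumed rule of thumb.
BYTES_PER_PARAM = {
    "fp32 weights": 4,
    "bf16/fp16 weights": 2,
    "full fine-tune, mixed precision + Adam (rule of thumb)": 16,
}


def gib(params: float, bytes_per_param: int) -> float:
    """Memory in GiB for `params` parameters at `bytes_per_param` bytes each."""
    return params * bytes_per_param / 2**30


for label, nbytes in BYTES_PER_PARAM.items():
    print(f"{label}: {gib(PARAMS_N1, nbytes):.1f} GiB")
```

That comes to roughly 4.1 GiB of weights at 16-bit precision, but around 33 GiB for a full fine-tune before counting activations, which is why the heavy jobs lean on data-center GPUs.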

Why This Balance Matters

GR00T N1’s pros—smart brain, free access, flexibility, fast learning, multimodal skills—are why it’s a robotics rockstar. I’d trust it to tidy my place or streamline a warehouse, and its open-source vibe could spark a robot boom. But the cons—learning curve, hardware costs, early-stage quirks, brain-only design, power needs—mean it’s not a magic fix yet. It’s like a shiny new bike: awesome once you learn to ride and get the right gear, but tricky if you’re starting from scratch.

My Kitchen Test (Imagination Mode)

Pros win: It cleans my counter—bowls stacked, spoons sorted—fast and free, learning my quirks. Cons hit: I struggle to set it up, need a pricey GPU, and it drops a spoon the first try. Worth it? Yep, with some effort!

NVIDIA’s betting big on GR00T N1, and I’m rooting for it. What do you think—would you tackle the cons for these pros? Let’s chat about it!

The Future of GR00T N1

Alright, we’ve talked about what the NVIDIA Isaac GR00T N1 can do right now—this open-source robot brain launched on March 18, 2025, is already turning heads with its smarts and skills. But where’s it headed? I’ve been thinking about this a lot, and with NVIDIA’s track record plus the buzz from GTC 2025, the future looks bright—and maybe a little wild! Picture robots helping in homes, hospitals, or even outer space, all powered by GR00T N1’s growing potential. In this section, I’ll explore what might come next with tons of depth, simple examples, and a friendly vibe.

From new models to wild new uses, let’s imagine where GR00T N1 could take us in the months and years ahead—starting from today, March 19, 2025!

1. Next-Gen Models: GR00T N2 and Beyond

GR00T N1 is just the beginning—NVIDIA’s not stopping at one version. Think of it like a smartphone: the first model’s awesome, but the next ones get even better. What could future GR00T models bring?

- What’s Coming: NVIDIA’s CEO Jensen Huang hinted at GTC 2025 that GR00T is a “foundation” for a family of models. We might see GR00T N2 by late 2025 or early 2026, with more power—say, 3–5 billion parameters instead of N1’s 2.2 billion.

- More Smarts: N2 could handle tougher tasks, like cooking a full meal instead of just fetching ingredients, by packing in bigger Vision-Language Models or faster Diffusion Transformers (maybe generating actions at 200 Hz instead of N1’s 120 Hz).

- Simple Example: Right now, GR00T N1 can stack my dishes. With N2, I’d love it to wash them too—spotting dirt, turning on the tap, and scrubbing. Imagine saying, “Make dinner,” and it whips up pasta from scratch!

- Why It’s Likely: NVIDIA’s newer GPUs (like the H200 and the Blackwell generation) could fuel this jump. NVIDIA hardware has already powered some huge leaps in AI scale (think how GPT-3 grew to GPT-4). GR00T’s open-source nature means community tweaks could speed this up too.

Digging Deeper

I see N2 as a beefier GR00T: more memory for longer, multi-step tasks, better multimodal skills (like tasting food? Okay, maybe not yet!), and smoother moves. In a factory, it could go from packing boxes to assembling gadgets with tiny screws, combining precision and brains in one. NVIDIA’s not saying much yet, but their “generalist robotics age” vision screams bigger, bolder models soon.

2. Wider Uses: From Homes to Space

GR00T N1’s already flexible, but the future could see it popping up everywhere—way beyond tidying shelves or warehouses.

- Home Helpers: By 2026, GR00T-powered bots might be common in houses. Companies like 1X (with NEO Gamma) are already testing it on household tidying. Next could come laundry, vacuuming, or even babysitting (safely, of course!).

- Healthcare Heroes: Hospitals could use it for fetching supplies, helping patients stand, or assisting surgeons with tools—all by 2027 if training data grows.

- Space Exploration: NASA’s eyeing humanoid robots, and GR00T N1’s adaptability makes it a contender. By 2030, it could run bots on Mars—fixing rovers or building bases.

- Simple Example: At home, I’d tell GR00T N1, “Water the plants.” In a hospital, it’s “Bring me a bandage.” On Mars? “Fix that solar panel.” Same brain, different worlds!

Why It’s Exciting

This spread is huge because GR00T’s generalist skills and open-source access mean anyone can tailor it. I imagine a GR00T bot in my kitchen, learning my recipes over months—then NASA grabs it for a moon base, tweaking it for zero gravity. Early adopters like NEURA Robotics are already pushing it into industrial spaces; homes and beyond are next!

Real Possibility

NVIDIA’s partnering with big names—1X, Boston Dynamics—and their Isaac platform ties GR00T to simulation tools like Omniverse. That’s a recipe for scaling fast. I’d bet we’ll see a GR00T-powered home bot ad by 2027—it’s that close.

3. Smarter Learning: Self-Improving Robots

GR00T N1 learns from data we feed it, but what if it could teach itself? The future might bring self-improving AI to GR00T.

- What’s Coming: By 2026, NVIDIA could add “reinforcement learning” so GR00T tweaks itself—like figuring out a better way to stack boxes after a few tries. Think 10% better performance yearly.

- How It’d Work: It’d watch its own moves, spot mistakes (like dropping a cup), and adjust—no human video needed. NVIDIA’s Cosmos tool might even simulate “what if” scenarios to speed this up.

- Simple Example: I tell GR00T N1 to sort my socks. First try, it mixes colors—oops! Next day, it’s learned from the mess and gets it right, all on its own. By week’s end, it’s folding them too!

- Big Picture: In a factory, it could master a new conveyor belt setup without a programmer—huge time-saver.
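The "try, spot the mistake, adjust" loop described above is the core idea behind reinforcement learning. Below is a toy sketch of it using an epsilon-greedy bandit, one of the simplest trial-and-error methods. This is not how NVIDIA trains GR00T. The grasp strategy names and success rates are made up, and a real robot learns continuous motions rather than picking from three fixed options.

```python
import random
from typing import Dict

# Hypothetical grasp strategies and their true success rates.
# The robot does NOT know these numbers; it has to discover them by practicing.
TRUE_SUCCESS_RATE = {
    "grab_fast": 0.2,
    "grab_from_side": 0.5,
    "grab_slow_from_top": 0.9,
}


def practice(trials: int = 2000, epsilon: float = 0.1, seed: int = 0) -> Dict[str, float]:
    """Epsilon-greedy trial and error: mostly repeat the best-looking strategy,
    occasionally try a random one, and update success estimates after every attempt."""
    rng = random.Random(seed)
    attempts = {s: 0 for s in TRUE_SUCCESS_RATE}
    estimate = {s: 0.0 for s in TRUE_SUCCESS_RATE}

    for _ in range(trials):
        if rng.random() < epsilon:
            strategy = rng.choice(list(TRUE_SUCCESS_RATE))  # explore something new
        else:
            strategy = max(estimate, key=estimate.get)      # exploit what works so far

        # Simulated attempt: succeeds with the strategy's hidden probability.
        success = rng.random() < TRUE_SUCCESS_RATE[strategy]

        # Incremental average: nudge the estimate toward the latest outcome.
        attempts[strategy] += 1
        estimate[strategy] += (float(success) - estimate[strategy]) / attempts[strategy]

    return estimate


if __name__ == "__main__":
    learned = practice()
    print("Best strategy after practice:", max(learned, key=learned.get))
    for name, value in learned.items():
        print(f"  {name}: estimated success {value:.2f}")
```

Running the sketch prints each strategy's learned success estimate. With these made-up numbers, the slow grab from the top should come out on top after enough practice. `epsilon` is the main design knob. Set it too low and the robot keeps repeating its first lucky guess; set it too high and it wastes attempts on moves it already knows are bad.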

Digging Deeper

This isn’t sci-fi. NVIDIA’s collaboration with Google DeepMind and Disney Research on Newton, an open-source physics engine announced at GTC 2025, could help make it real. I see GR00T N1 watching me fumble with laundry, then practicing in a virtual world overnight. By 2028, it might learn my quirks (like how I hate socks inside out) without me saying a word. That’s a robot that grows with you!

4. Better Tools and Ecosystem Integration

GR00T N1’s awesome, but its future might shine brighter with new tools and tighter ties to NVIDIA’s tech family.

- What’s Coming: Expect upgrades to Isaac Lab and Omniverse by late 2025—better simulations for training GR00T faster. Newton (with Google DeepMind) could add physics smarts, like knowing how heavy a box is before lifting.

- Ecosystem Boost: It might sync with NVIDIA’s Jetson chips for smaller bots or DGX systems for mega-training—think 100,000 GPU hours by 2026.

- Simple Example: Right now, I train GR00T N1 to grab my keys with a video. With better Isaac Lab, I’d simulate a 3D key-grab in minutes—no camera needed. Newton might stop it from yanking too hard and breaking them!

- Why It’s Likely: NVIDIA’s all about ecosystems, and GR00T’s already linked to their GPUs and sim tools. Tighter integration could cut training time a lot (maybe in half), making it a breeze for hobbyists like me.

Why It Matters

This could democratize GR00T even more. I’d love a $200 Jetson-powered GR00T bot that learns my house layout in a day, not weeks. Big companies could churn out GR00T bots like cars off an assembly line—fast and cheap.

5. Everyday Impact: Robots Everywhere

Looking further—say, 2030—GR00T N1’s future might mean robots in daily life, changing how we live and work.

- Home Life: Affordable GR00T bots ($2,000?) could cook, clean, or tutor kids—trained by families or communities via open-source data.

- Workplaces: Factories might swap human-heavy lines for GR00T bots by 2029—safer, tireless workers. Retail could use them for stock and chats.

- Simple Example: I wake up, and my GR00T bot’s made coffee (learned my brew style), tidied the living room, and prepped my work bag—all before I’m out of bed!

- Social Shift: Jobs might change—less grunt work, more robot-managing roles. Schools could teach “GR00T coding” to kids.

Going Deeper

This isn’t just tech—it’s life. I imagine a 2030 where my GR00T bot’s a pal—knows I’m grumpy without coffee and cracks a joke (if audio gets funnier!). NVIDIA’s pushing this with partners like 1X, and open-source means prices could drop—think $500 bots by 2035. It’s a slow build, but the seeds are planted now.

Trustworthy Take

Jensen Huang’s “age of generalist robotics” isn’t just hype. At GTC 2025, 1X showed its NEO Gamma humanoid doing autonomous household tidying with a policy built on GR00T N1. Developers everywhere can also download and adapt the model, so this everyday future feels real, not dreamy.

6. Challenges to Watch

The future’s bright, but not flawless. Here’s what might shape GR00T N1’s path:

- Cost Hurdles: GPUs and bot bodies need to get cheaper—maybe $100 Jetson chips by 2027—to hit homes big-time.

- Safety First: By 2026, GR00T might need “safety layers” to avoid accidents—like not bumping kids—pushed by regs or community fixes.

- Ethics Questions: Who owns the data GR00T learns from? Open-source helps, but privacy tweaks might come by 2028.

My Thoughts

I’d love a GR00T bot, but I’d want it safe around my dog—NVIDIA’s on it, I bet. Costs dropping and ethics sorting out are key to this future blooming.

Why This Future Excites Me

GR00T N1’s tomorrow (new models, wild uses, self-learning, better tools, everyday impact) isn’t just tech talk; it’s a robot revolution I can feel coming. I see it starting small (my coffee bot by 2027?) and growing huge (Mars bases by 2035?). NVIDIA’s got the chops, with roughly 50,000 H100 GPU hours of pre-training behind N1 and partners like Boston Dynamics. The open-source community adds fuel to the fire. Challenges like cost and safety are real, but solvable. I’d say by 2030, GR00T’s descendants will be as normal as smartphones: helping, learning, and maybe even joking with us.

What do you think—ready for a GR00T-powered future? Let’s dream big together!

Final Conclusion

NVIDIA’s GR00T N1 is a mind-blowing leap for humanoid robots: smart, open, and ready to change the game. I’ve loved researching it, from its dual-brain magic to its free-for-all vibe. Whether you’re a coder, a business owner, or just a robot fan, there’s something here for you. So, let’s see where it takes us!

Got ideas or questions? Drop a comment—I’d love to chat! Now, go check out GR00T N1 on NVIDIA’s site and start your robot adventure. Catch you later!
