Qwen2.5-Omni: The AI That Can See, Hear, Talk, and Write

Introduction

Hey there! I’m Alex, and today I’m super excited to chat with you about something amazing in the world of artificial intelligence (AI): Qwen2.5-Omni. Imagine an AI that can see pictures, hear sounds, talk back to you, and write like a pro—all in real-time! Sounds like something out of a sci-fi movie, right? Well, it’s real, and it’s here thanks to the brilliant folks at Alibaba’s Qwen team. Released on March 26, 2025, this AI is shaking things up, and I’m here to break it all down for you in a fun, easy way.

In this blog post, we’ll explore what Qwen2.5-Omni is, how it works, and why it’s such a big deal. Whether you’re a tech newbie or a pro, I’ve got you covered with simple words and plenty of depth. Let’s get started!

What Is Qwen2.5-Omni? A Quick Intro

So, what’s Qwen2.5-Omni all about? In simple terms, it’s an AI model built by the Qwen team at Alibaba Cloud. It’s part of their Qwen series, which has been getting better and better with each release. This one, though, is special—it’s called “Omni” because it can handle everything: text, images, audio, and even video. Plus, it can respond to you instantly with words or even a natural-sounding voice. Pretty cool, huh?

Think of it like a super-smart friend who can look at a photo, listen to a song, watch a video, and then chat with you about it—all without missing a beat. It’s designed to work fast and smooth, even on smaller devices like phones or laptops. Oh, and the best part? It’s open-source, meaning anyone can use it or tweak it for free (you can find it on Hugging Face and GitHub—more on that later!).

Why Does Qwen2.5-Omni Matter?

You might be wondering, “Alex, why should I care about this AI?” Great question! Here’s why it’s a big deal:

- It’s Multimodal: Most AIs stick to one thing—like writing text or recognizing images. Qwen2.5-Omni does it all at once. It’s like having a Swiss Army knife of AI tools.

- Real-Time Magic: It doesn’t wait until it has finished thinking. It streams its reply, so text or speech starts coming out while the rest of the answer is still being worked out. That’s perfect for things like live chats or video calls.

- Small but Mighty: With just 7 billion parameters (a fancy way of saying how “big” an AI is), it’s lightweight but still super powerful.

- Free for Everyone: Being open-source means developers, students, or even curious folks like you and me can play with it.

This mix of features makes Qwen2.5-Omni a game-changer for everything from virtual assistants to creative projects. Let’s dig deeper into how it works!

How Does Qwen2.5-Omni Work? The Tech Made Simple

Okay, let’s talk about the brains behind this AI. Don’t worry, I’ll keep it simple! Qwen2.5-Omni uses something called the “Thinker-Talker” architecture. Picture it like this: the “Thinker” is the brain. It figures out what’s going on in the text, images, audio, or video you give it and writes the text reply. The “Talker” is the mouth. It takes what the Thinker produces, while it’s still being produced, and turns it into natural-sounding speech on the fly.

Here’s a breakdown of how it handles its cool abilities:

- Seeing (Vision): It can look at images or videos and understand what’s in them—like spotting a dog in a photo or figuring out what’s happening in a movie clip.

- Hearing (Audio): It listens to sounds or speech and gets the meaning, whether it’s a song or someone giving instructions.

- Talking (Speech): It can reply with a natural-sounding voice, not just boring text. Imagine asking it a question and hearing it talk back like a real person!

- Writing (Text): It writes clear, helpful answers or even creative stuff like stories or captions.
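
To make the Thinker-Talker idea a bit more concrete, here’s a toy Python sketch of the streaming hand-off. It’s not Qwen’s code: `thinker` and `talker` are hypothetical stand-ins that just pass strings around, and the “hidden state” is a made-up placeholder. What it does show is the key design choice: the Talker starts producing speech chunks as soon as the first text token arrives, instead of waiting for the whole reply.

```python
from typing import Iterator, List, Tuple

# Hypothetical stand-ins for the two halves of a Thinker-Talker model.
# Neither function comes from Qwen's codebase; they only illustrate the data flow.


def thinker(prompt: str, log: List[str]) -> Iterator[Tuple[str, List[float]]]:
    """Toy 'brain': streams a text reply one token at a time.

    Each token is paired with a placeholder 'hidden state' (just the token
    length) to echo the idea that the Talker receives more than plain text.
    """
    reply = f"Here is what I noticed about {prompt}"
    for token in reply.split():
        log.append(f"think:{token}")
        yield token, [float(len(token))]


def talker(stream: Iterator[Tuple[str, List[float]]], log: List[str]) -> Iterator[str]:
    """Toy 'mouth': turns each incoming token into a fake speech chunk right away."""
    for token, _hidden in stream:  # a real Talker would condition on the hidden state
        log.append(f"speak:{token}")
        yield f"<speech:{token}>"


log: List[str] = []
chunks = list(talker(thinker("the photo", log), log))
print(chunks)
print(log[:4])  # ['think:Here', 'speak:Here', 'think:is', 'speak:is']
```

The log alternates between thinking and speaking, which is the whole point of streaming: you hear the start of the answer while the rest is still being worked out.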

What’s extra neat is something called “TMRoPE” (Time-aligned Multimodal RoPE, where RoPE stands for Rotary Position Embedding). It’s a clever trick for keeping video and audio in sync: each video frame and each slice of audio gets a position based on when it actually happens, so if you’re watching a video, the AI knows which words were spoken during which frames. This makes it awesome for things like live translations or video captions.
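
Here’s a simplified sketch of the time-alignment idea behind TMRoPE. It assumes, based on my reading of the Qwen2.5-Omni technical report (so treat the exact numbers as assumptions), that each temporal position ID stands for 40 ms of real time and that video and audio are interleaved in 2-second chunks, video first. It only handles the time part of the position IDs; it skips the height and width parts and the rotary math itself. `temporal_id` and `interleave` are hypothetical helper names.

```python
from typing import List, Tuple

MS_PER_ID = 40    # assumption: one temporal position ID per 40 ms of real time
CHUNK_MS = 2000   # assumption: video and audio are interleaved in 2-second chunks


def temporal_id(timestamp_ms: float) -> int:
    """Map a timestamp to a temporal position ID based on real time,
    so the ID does not depend on the video frame rate or audio frame rate."""
    return int(timestamp_ms // MS_PER_ID)


def interleave(video_ts: List[float], audio_ts: List[float]) -> List[Tuple[str, int]]:
    """Order tokens chunk by chunk: within each 2 s window, video tokens come
    first, then audio tokens, each tagged with its time-aligned temporal ID."""
    end = max(video_ts + audio_ts, default=0.0)
    sequence: List[Tuple[str, int]] = []
    start = 0.0
    while start <= end:
        stop = start + CHUNK_MS
        sequence += [("video", temporal_id(t)) for t in video_ts if start <= t < stop]
        sequence += [("audio", temporal_id(t)) for t in audio_ts if start <= t < stop]
        start = stop
    return sequence


# Hypothetical input: video at 2 frames per second, plus a few 40 ms audio slices.
frames = [0.0, 500.0, 1000.0, 1500.0, 2000.0, 2500.0]
audio = [0.0, 40.0, 80.0, 2000.0, 2040.0]
print(interleave(frames, audio))
```

In this example the video frame and the audio slice at 2,000 ms both get temporal ID 50, so the model can tell they happened at the same moment even though video and audio arrive at different rates.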

The Numbers Behind Qwen2.5-Omni: Let’s Look at the Data

I love a good visual, so let’s break down some key facts with a few charts and a table!

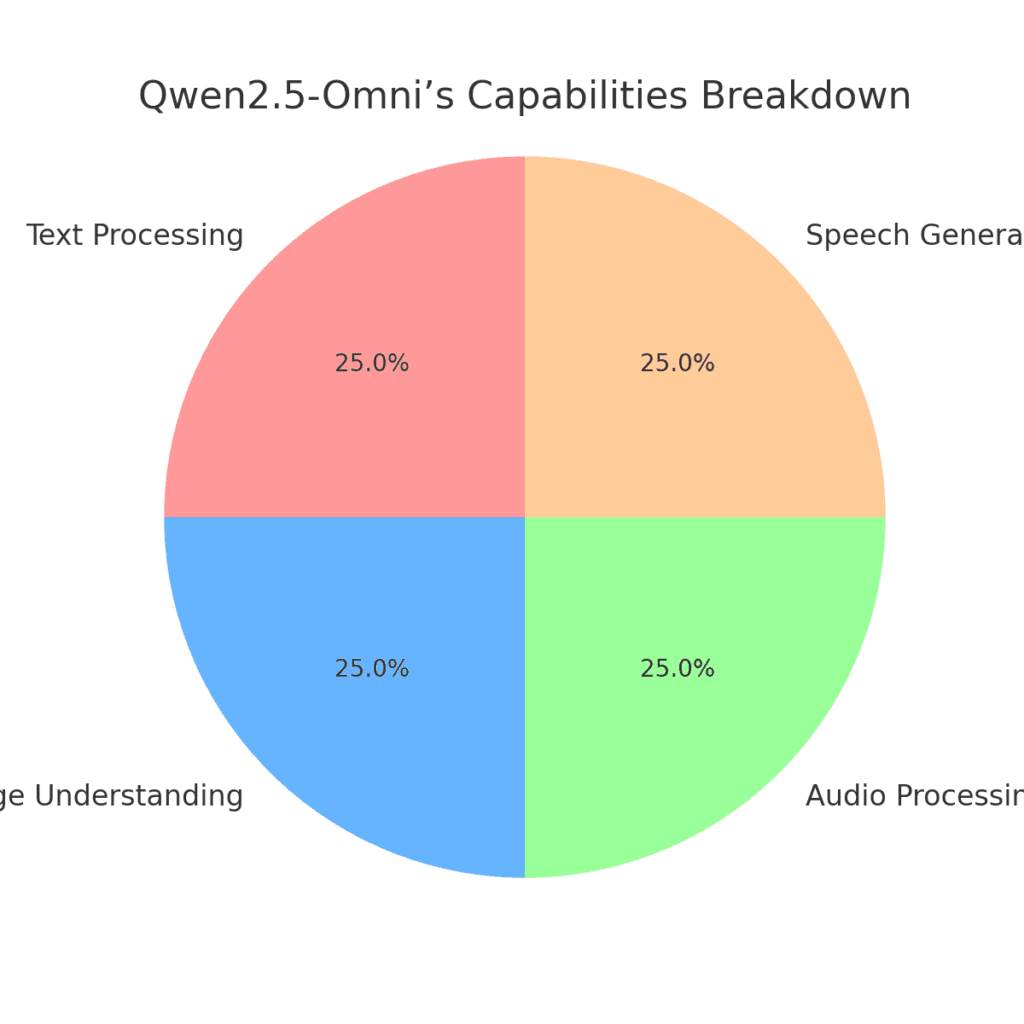

Pie Chart: Qwen2.5-Omni’s Capabilities Breakdown

- Text Processing: 25% (writing, reading, reasoning)

- Image Understanding: 25% (analyzing photos or video frames)

- Audio Processing: 25% (hearing speech or sounds)

- Speech Generation: 25% (talking back naturally)

Think of this as an illustration rather than measured data. The point is that it’s balanced: every skill gets equal love!

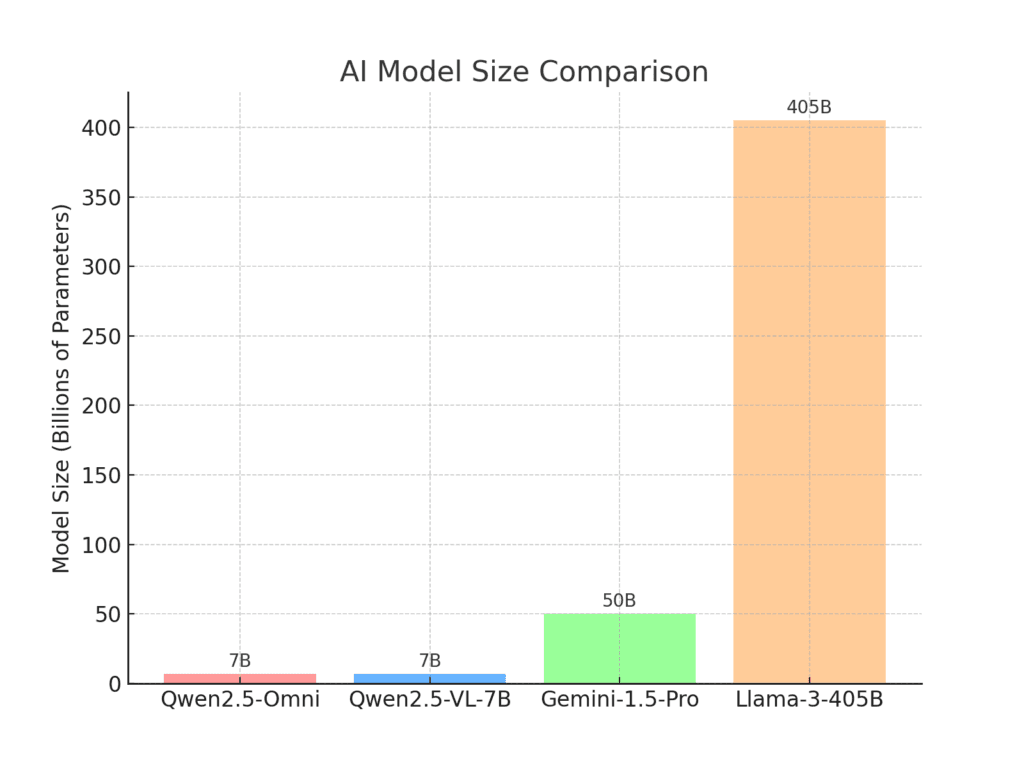

Bar Graph: Model Size Comparison

Picture a bar graph with these AI models and their sizes (in billions of parameters):

- Qwen2.5-Omni: 7 billion

- Qwen2.5-VL-7B: 7 billion

- Gemini-1.5-Pro: not disclosed by Google (~50 billion is an unofficial estimate)

- Llama-3.1-405B: 405 billion

Qwen2.5-Omni is tiny compared to giants like Llama, but it still packs a punch!
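
Why does size matter so much for running on phones and laptops? A rough rule of thumb: the memory needed just to hold a model’s weights is its parameter count times the bytes per parameter. The sketch below assumes 2 bytes per parameter for 16-bit weights and 0.5 bytes for 4-bit quantized weights, and it ignores activations, the KV cache, and anything the headline parameter count leaves out, so real usage is higher. Gemini is skipped because Google hasn’t published its size.

```python
# Assumed storage cost per parameter for two common weight formats.
BYTES_PER_PARAM = {"16-bit": 2.0, "4-bit": 0.5}


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weights-only memory: billions of params x bytes each = gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


for name, size in [("Qwen2.5-Omni-7B", 7), ("Llama 405B", 405)]:
    for fmt, nbytes in BYTES_PER_PARAM.items():
        print(f"{name:16} {fmt:6} ~{weight_memory_gb(size, nbytes):.1f} GB")
```

By this estimate the 7B model needs about 14 GB at 16-bit (about 3.5 GB at 4-bit), while a 405B model needs about 810 GB, which is data-center territory.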

Comparing Qwen2.5-Omni to Other AIs: A Handy Table

Let’s see how Qwen2.5-Omni stacks up against some other big names. Here’s a table to make it crystal clear:

| Feature | Qwen2.5-Omni-7B | Qwen2.5-VL-7B | Gemini-1.5-Pro | Llama-3.1-405B |
|---|---|---|---|---|
| Text Processing | Yes (excellent) | Yes (good) | Yes (excellent) | Yes (top-tier) |
| Image Understanding | Yes (strong) | Yes (very strong) | Yes (strong) | No |
| Audio Processing | Yes (beats Qwen2-Audio) | No | Yes (good) | No |
| Speech Generation | Yes (natural, real-time) | No | No | No |
| Video Understanding | Yes (solid) | Yes (excellent) | Yes (strong) | No |
| Size (Parameters) | 7 billion | 7 billion | Not disclosed (~50 billion est.) | 405 billion |
| Open-Source? | Yes | Yes | No | Yes |
| Real-Time Response | Yes (streaming) | No | Yes (somewhat) | No |

What This Tells Us

- Qwen2.5-Omni vs. Qwen2.5-VL-7B: Omni adds audio and speech to the mix, while VL focuses on vision and text. Omni’s like an all-rounder!

- Qwen2.5-Omni vs. Gemini-1.5-Pro: Gemini’s bigger and closed-source, but Omni’s speech generation gives it an edge for real-time chats.

- Qwen2.5-Omni vs. Llama-3.1-405B: Llama’s a text beast, but it can’t see, hear, or talk like Omni can.

Diving Deeper: What Can Qwen2.5-Omni Do?

Now that we’ve got the basics, let’s explore what this AI can actually do. I’ll give you some real-world examples to spark your imagination!

1. Seeing Pictures and Videos

Say you show Qwen2.5-Omni a photo of a beach sunset. It can tell you, “That’s a beautiful sunset with orange and pink colors over the ocean!” Or, if you play a video of a cooking tutorial, it might say, “The chef’s chopping onions at 1:23—watch out for tears!” It’s great for apps that need to “see” the world.

2. Hearing Sounds and Speech

Imagine playing a podcast clip for it. Qwen2.5-Omni could summarize it: “The speaker’s talking about space travel—pretty cool stuff!” Or if you ask it a question out loud, like “What’s the weather like?” it’ll hear you and respond—maybe even with a voice!

3. Talking Back Naturally

This is my favorite part. Ask it, “Tell me a joke,” and it might say (in a smooth voice), “Why don’t skeletons fight each other? They don’t have the guts!” It’s perfect for voice assistants or interactive games.

4. Writing Like a Pro

Need a quick story? Tell it, “Write about a cat astronaut.” It could whip up: “Fluffy the cat floated through space, chasing laser dots on Mars.” It’s fast, creative, and super helpful for writers or students.

How Does It Perform? Benchmarks and Results

Let’s talk numbers again—because who doesn’t love proof? The Qwen team tested Qwen2.5-Omni against other models, and it shines bright. Here’s how it did on some big tests (called benchmarks):

- MMLU (General Knowledge): Scores well on this broad test of knowledge across dozens of subjects.

- GSM8K (Math Problems): Solves math like a champ, even when you ask it out loud.

- OmniBench (Multimodal Tasks): Sets state-of-the-art results on tasks that mix text, images, audio, and video.

- Speech Quality: Its voice sounds more natural than many competitors.

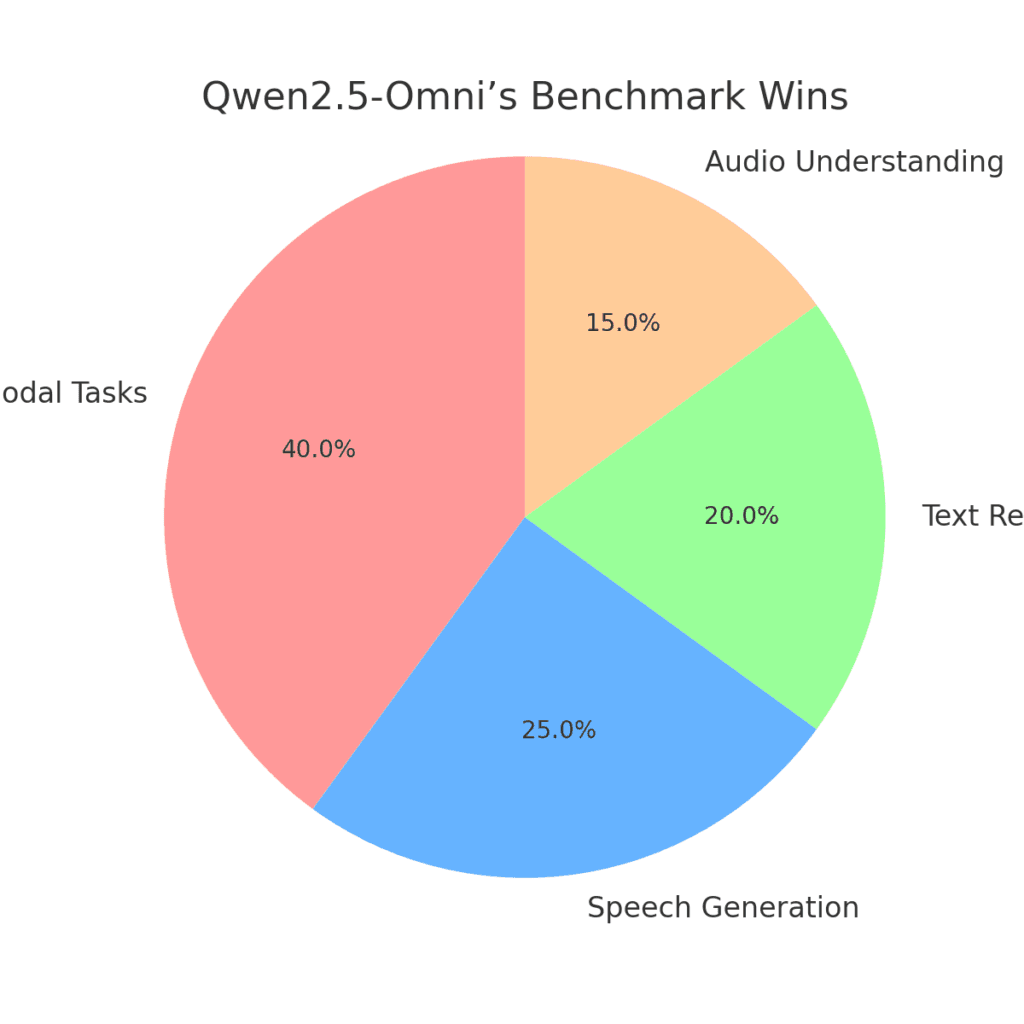

Pie Chart: Benchmark Wins

- Multimodal Tasks: 40% (biggest slice—its specialty!)

- Speech Generation: 25%

- Text Reasoning: 20%

- Audio Understanding: 15%

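These slices are my rough way of picturing it, not official numbers from the Qwen team, but the takeaway stands: it’s a multimodal superstar!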

Who Can Use Qwen2.5-Omni?

This AI isn’t just for tech wizards—it’s for everyone! Here’s who might love it:

- Developers: Build apps like real-time translators or smart assistants.

- Students: Get help with homework, from math to video summaries.

- Creatives: Write stories, generate voiceovers, or analyze art.

- Businesses: Create chatbots that see, hear, and talk to customers.

Since it’s open-source, you can grab it from Hugging Face or GitHub and start experimenting. Don’t know how to code? No worries—there are demos online, like Qwen Chat, where you can try it out!

The Future of Qwen2.5-Omni

What’s next? The Qwen team isn’t stopping here. They’re already planning to make it even better at following voice commands and understanding audio-visual combos (like lip-syncing in videos). Imagine an AI that could watch a movie with you and chat about it in real-time—that’s the dream!

Final Thoughts: Why I’m Excited About Qwen2.5-Omni

Hey, thanks for sticking with me through this long post! Qwen2.5-Omni is one of the coolest AIs I’ve seen. It’s not just about what it can do today; it’s about where it’s taking us tomorrow. With its ability to see, hear, talk, and write, it’s like a little piece of the future in our hands.

So, what do you think? Are you ready to try it out? Let me know in the comments—I’d love to hear your ideas! And if you enjoyed this post, share it with your friends. Let’s keep exploring the amazing world of AI together!