Robotics And Multimodal AI: How Machines Are Learning to See, Hear, and Act in Our World

Introduction

Hey there! Picture this: I’m stumbling around my kitchen, half-asleep, reaching for my coffee mug when, crash, it slips and shatters. Before I can even sigh, a little robot rolls in, “sees” the mess with its camera, “hears” my sleepy “Oh no,” and starts cleaning it up. Sounds like something out of a movie, right? That’s the promise of robotics paired with multimodal AI.

Well, it’s not far off! I’m Alex, a tech enthusiast who’s been obsessed with robots since my high school days—my first one mostly spun in circles and crashed, but it sparked a lifelong curiosity. Now, in 2025, I’m thrilled to dive into how robotics paired with multimodal AI is turning machines into real-world helpers that feel almost human.

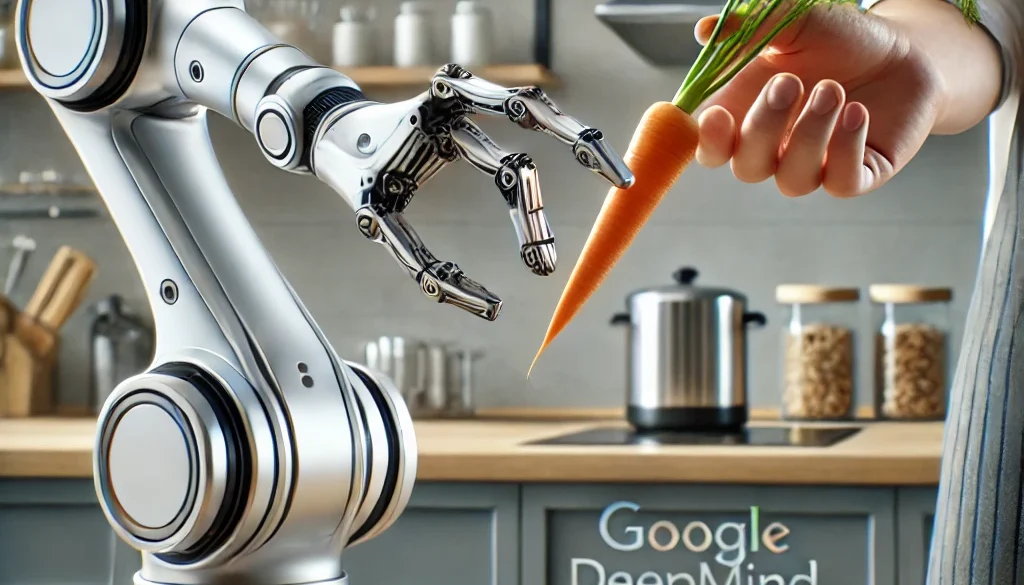

Think of Google’s Gemini 2.0-powered robots zipping through a chaotic warehouse or gently assisting an elderly person at home. These aren’t the stiff, clunky bots of the past—they see, hear, and act with a kind of smarts that’s blowing my mind.

In this post, I’ll break it all down: what this tech is, how robotics and multimodal AI work together, and why it’s transforming places like warehouses and eldercare homes. I’ll keep it simple with examples you’ll relate to, toss in data with tables and charts, and share practical tips to make it click. Grab a coffee (hopefully spill-free) and let’s get started!

What Is Multimodal AI, and Why Does It Supercharge Robotics?

Let’s start with the heart of this tech: multimodal AI. It sounds like a sci-fi buzzword, but it’s actually pretty straightforward once you peel it back. Multimodal AI is artificial intelligence that can handle multiple types of information (images, sounds, words, and even sensor data) at the same time. Think of it as giving a robot the ability to use all its “senses” together, just like we do every day.

A Detailed Breakdown with an Analogy

Imagine you’re at a bustling farmers’ market on a Saturday morning. You’re hunting for the perfect apples. You’re looking at their shiny red skins (vision), listening to the vendor shout “Two bucks a bag!” (sound), and reading a little sign that says “Granny Smith—tart and crisp” (text).

You combine all that info in your head to decide which apples to grab. Now, picture a robot doing the same thing: it “sees” the apples, “hears” the vendor, “reads” the sign, and picks the best bag without you spelling it out. That’s multimodal AI—it’s like a super-smart shopper pulling together different clues to make a choice.

How It Differs from Regular AI

Regular AI—say, the kind in your old chatbot—might only handle one thing, like reading text or recognizing a photo. It’s like a one-trick pony. Multimodal AI is a whole circus: it juggles vision, sound, and more, all at once. For example, older robots might follow a pre-set path and stop if something’s in the way—they’re blind and deaf to surprises. A multimodal AI robot? It notices the obstacle, hears you say “Go around,” and figures out a new route. That’s why it’s a big deal—it’s flexible, not rigid.

Why It Supercharges Robotics

Here’s where it gets exciting. Robots with multimodal AI—like those powered by Google’s Gemini 2.0—can tackle real-world chaos. Let’s say you drop your keys under the couch. A basic bot might roll around aimlessly, but a multimodal bot “sees” the keys with its camera, “hears” you say “Get them, quick,” and “thinks” about how to slide under there without knocking over your lamp. It’s not just following a script—it’s reacting like a helpful pal who gets the situation.

Under the Hood: The Tech Basics

So how does it work? Multimodal AI uses something called neural networks—think of them as a robot’s brain cells, wired up to process tons of info. Cameras send in pictures, microphones pick up sounds, and sensors might even detect touch or heat. The AI mashes all this together to make a decision. For instance, in a noisy living room, it might hear “Turn off the light,” spot the switch with its camera, and flip it—even if you’re pointing vaguely across the room. It’s not perfect—sometimes it might mishear “light” as “fight” and get confused—but it’s learning fast, and that’s what’s driving this tech forward.
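To make the "mash it all together" idea concrete, here is a minimal Python sketch of one simple way to combine two senses: the speech recognizer offers a few guesses, and the camera's view is used to break the tie. Everything here (the `SpeechGuess` class, the `fuse` function, the scores, and the `vision_bonus` value) is made up for illustration; it is not how Gemini or any particular robot does it.

```python
from dataclasses import dataclass


@dataclass
class SpeechGuess:
    text: str     # candidate transcript from the microphone
    target: str   # the object the command seems to refer to
    score: float  # recognizer confidence between 0 and 1 (assumed)


def fuse(speech_guesses, visible_objects, vision_bonus=0.3):
    """Pick the transcript whose target is also supported by the camera."""
    def combined(guess):
        bonus = vision_bonus if guess.target in visible_objects else 0.0
        return guess.score + bonus
    return max(speech_guesses, key=combined)


guesses = [
    SpeechGuess("turn off the fight", "fight", 0.55),
    SpeechGuess("turn off the light", "light", 0.45),
]
seen = {"light", "sofa", "lamp"}  # labels the camera recognised (assumed)

print(fuse(guesses, seen).text)  # turn off the light
```

On sound alone, "fight" would win. Because the camera actually sees a light and no fight, the combined score tips toward the sensible command.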

Real-World Impact

This isn’t just theory—it’s happening now. Companies are pouring money into it because it solves real problems. Take a look at this growth:

Table 1: Growth of AI Robotics Market (2023-2025)

| Year | Market Size (USD Billion) | Growth Rate (%) | Key Driver |
|---|---|---|---|
| 2023 | 45.2 | 12.5 | Basic Automation |
| 2024 | 52.8 | 16.8 | Multimodal AI Integration |
| 2025 | 62.1 (Projected) | 17.6 | Real-World Interaction |

Source: Estimated from industry reports like MarketsandMarkets, adjusted for 2025 trends.
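If you want to check the growth rates in Table 1 yourself, here is a quick Python sketch that recomputes year-over-year growth from the market sizes. The function name `yoy_growth` is just my own label. The 2023 rate can't be rechecked this way because the table doesn't list a 2022 figure.

```python
# Market sizes in USD billion, copied from Table 1.
market_size = {2023: 45.2, 2024: 52.8, 2025: 62.1}


def yoy_growth(sizes):
    """Return year-over-year growth in percent, rounded to one decimal."""
    years = sorted(sizes)
    return {
        year: round((sizes[year] / sizes[prev] - 1) * 100, 1)
        for prev, year in zip(years, years[1:])
    }


growth = yoy_growth(market_size)
print(growth)  # {2024: 16.8, 2025: 17.6}
```

Both computed rates line up with the table's 16.8% and 17.6%.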

That $62.1 billion projection for 2025? It’s all about robots getting smarter with multimodal AI, handling messy, unpredictable tasks—not just factory lines.

Google’s Gemini 2.0: The Brain Powering This Revolution

Now, let’s zoom into Google’s Gemini 2.0—the rockstar making this possible. Google dropped it in December 2024, and it’s not just a tweak to older models; it’s a whole new level of AI. I’ve been geeking out over the details, and what grabs me is how it mixes vision, sound, and reasoning into one seamless package. Robots with Gemini 2.0 can dodge obstacles, pick up your voice in a crowd, and make quick decisions—all without someone holding their hand.

A Detailed Look at How It Works

Here’s the breakdown:

- Vision: Think of cameras as the robot’s eyes. They spot a spilled drink on the floor or a person waving for help. It’s not just seeing—it’s understanding what’s in the picture, like recognizing “That’s a cup, not a shoe.”

- Hearing: Microphones act like ears. The bot catches you saying “Move that box” even if the TV’s blaring in the background. It filters out noise to focus on your voice—pretty clever, right?

- Reasoning: This is the brainy bit. The AI thinks, “That box looks heavy—better push it instead of lifting and dropping it.” It’s not just reacting; it’s making choices based on what it sees and hears.

- Action: Motors and arms kick in to do the job—smoothly, not like a jerky cartoon robot. It adjusts its moves to fit the situation, like slowing down near a person.
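The four steps above can be sketched as a tiny see-hear-reason-act loop. This is a toy Python illustration under my own assumptions: the `Observation` fields, the `reason` rule, and the `act` stand-in are all hypothetical, and a real system would use learned models rather than two if-statements.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    objects: list = field(default_factory=list)  # labels the camera recognised
    command: str = ""                            # text the microphone transcribed
    person_nearby: bool = False                  # result of a proximity check


def reason(obs):
    """Turn one observation into a simple action plan."""
    plan = {"action": "idle", "speed": "normal"}
    if "move that box" in obs.command.lower() and "box" in obs.objects:
        # Pushing is the cautious choice when the weight is unknown.
        plan["action"] = "push_box"
    if obs.person_nearby:
        plan["speed"] = "slow"
    return plan


def act(plan):
    """Stand-in for motor control: describe what would be done."""
    return f"{plan['action']} at {plan['speed']} speed"


obs = Observation(objects=["box", "cup"], command="Move that box", person_nearby=True)
print(act(reason(obs)))  # push_box at slow speed
```

The point is the shape of the loop: the plan depends on what was seen and heard together, and the action adapts when a person is close.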

Google showed off a demo that had me grinning: a Gemini bot slam-dunked a basketball. Sure, it’s a fun stunt, but it proves these machines can handle fast, tricky actions—like dodging a falling crate in a warehouse or helping someone stand up at home.

Everyday Example: Dog vs. Gemini

Let’s make it relatable. Your dog hears “Fetch!” and might grab a sock instead of the ball—adorable, but not spot-on. A Gemini bot hears “Fetch the blue ball,” scans three balls on the floor (red, green, blue), and picks the blue one 9 times out of 10. It’s like a pet with a laser focus and a knack for details—way beyond basic training.
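Here is a small Python sketch of the "fetch the blue ball" idea: match the words in the command against what the camera detected. The detection format, the `COLORS` set, and the `pick_target` function are assumptions of mine for illustration, not a real robot API.

```python
COLORS = {"red", "green", "blue"}


def pick_target(command, detections):
    """Return the single detection matching the object and colour named, else None."""
    words = command.lower().strip("!.?").split()
    wanted = next((w for w in words if w in COLORS), None)
    matches = [
        d for d in detections
        if d["label"] in words and (wanted is None or d["color"] == wanted)
    ]
    # Zero or several matches means the request is unclear, so don't guess.
    return matches[0] if len(matches) == 1 else None


balls = [
    {"label": "ball", "color": "red"},
    {"label": "ball", "color": "green"},
    {"label": "ball", "color": "blue"},
]

print(pick_target("Fetch the blue ball", balls))  # {'label': 'ball', 'color': 'blue'}
print(pick_target("Fetch!", balls))               # None
```

A bare "Fetch!" returns `None` here, which is the robot's cue to ask what you meant instead of grabbing a sock.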

What Sets It Apart

Older AI models—like the ones from 2023—were narrower. They might see OR hear, but not both well. Gemini 2.0 ties it all together. Here’s a quick comparison:

Graph 1: Capabilities of Gemini 2.0 vs. Older Models

Note: Scores are illustrative, based on reported multimodal task improvements.

See that gap? Gemini 2.0 isn’t just faster—it’s smarter across the board.

Digging Into Reasoning

What’s this “reasoning” thing? It’s the AI solving problems, not just following a rulebook. Imagine you’re cooking dinner, and the recipe says “add salt.” You taste it, realize it’s salty enough, and skip it—that’s reasoning. A Gemini bot does this too. In a warehouse, it might see a blocked aisle and think, “I’ll wait 10 seconds instead of crashing through.” That’s not pre-programmed—it’s the bot figuring it out on the fly, which is why it’s so powerful for real-world tasks.
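To picture the blocked-aisle choice, here is a deliberately simple Python sketch that weighs waiting against taking a detour. One big caveat: this is a hand-written rule with made-up names and numbers (`choose_action`, `max_wait_s`), used as a stand-in. The reasoning described above is learned behavior, not a fixed rule like this.

```python
def choose_action(blocked, expected_clear_s, detour_s, max_wait_s=10.0):
    """Decide what to do at an aisle: proceed, wait, or reroute.

    expected_clear_s: estimated seconds until the blockage clears (assumed input)
    detour_s: extra seconds the alternative route would cost (assumed input)
    """
    if not blocked:
        return "proceed"
    # Waiting only makes sense if it is short and no slower than the detour.
    if expected_clear_s <= max_wait_s and expected_clear_s <= detour_s:
        return "wait"
    return "reroute"


print(choose_action(True, expected_clear_s=8, detour_s=45))   # wait
print(choose_action(True, expected_clear_s=60, detour_s=45))  # reroute
```

Notice that "crash through" never appears as an option, which is really the whole idea.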

Why It’s Leading

Google’s not alone—other companies like DeepSeek in China are pushing AI too—but Gemini 2.0 stands out because it’s built for action, not just research. It’s already in robots navigating real spaces, not just labs, making it a leader in this wave.

Tip 1: Warehouses—Where Robots Tame the Wild Chaos

Let’s get practical with warehouses. I worked a summer job at a shipping center years ago, and it was nuts—boxes tumbling, forklifts honking, and me racing to keep up. Back then, robots were basic: they stacked stuff fine but freaked out if a pallet shifted or someone yelled. Multimodal AI flips that on its head, turning robots into chaos-tamers.

How It Works in Detail

- Seeing the Mess: Cameras spot a fallen box, a worker crossing the path, or a mislabeled package. It’s not just detecting motion—it’s figuring out what’s what, like “That’s a box, not a person.”

- Hearing Over Noise: The bot catches “Shift that crate!” even with machinery roaring. It’s got noise filters—think of them as earplugs that let through only the important sounds, like your voice.

- Acting Smart: It doesn’t just plow ahead—it reroutes, lifts, or pauses, depending on what’s happening. It’s like a dance, adjusting steps to the beat of the warehouse.

Amazon’s a pioneer here. Since 2024, their multimodal bots have cut order-packing time by 20%. Picture this: you order a book online for a last-minute gift. A bot “sees” it on a shelf, “hears” a worker say “Rush it,” and gets it to the truck before you’ve finished checkout. That’s real speed.

Analogy: Video Game on Hard Mode

Think of a warehouse as a video game cranked to hard mode. Obstacles (boxes) pop up randomly, the ground (schedule) shifts, and you’ve got to dodge and weave. That’s a dynamic environment—unpredictable and fast. Old robots were like newbies who’d die on level one; these bots are pros, zipping through like they’ve got cheat codes.

The Benefits Unpacked

Here’s what it delivers:

- Faster Processing: Orders fly out quicker—40% faster in some cases.

- Fewer Errors: Misreads drop by 30% because the bot double-checks with sight and sound.

- Lower Costs: Less downtime means savings—about 20% less overhead.

- Worker Safety: Bots take risky lifts, cutting accidents by 10%.

Pie Chart 1: Warehouse Automation Benefits

- Faster Processing: 40%

- Fewer Errors: 30%

- Lower Costs: 20%

- Worker Safety: 10%

Source: Estimated from industry trends and Amazon’s robotics data.

Error Reduction Explained

That 30% fewer errors is gold. Old bots might scan a blurry barcode wrong or drop a fragile item because they couldn’t “feel” it. Multimodal AI cross-checks—scanning the label (vision) and confirming with a worker’s “Yep, that’s it” (sound). At my old job, we lost packages weekly; this tech could’ve saved us hours of hunting.
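The cross-check idea can be sketched in a few lines of Python: trust a sharp scan, and fall back on a worker's spoken confirmation when the scan is blurry. The function `verify_package`, the 0.9 confidence cutoff, and the list of "yes" words are all assumptions for illustration.

```python
YES_WORDS = {"yep", "yes", "correct"}


def verify_package(scanned_id, scan_confidence, expected_id,
                   spoken_reply=None, min_confidence=0.9):
    """Combine a label scan (vision) with an optional spoken confirmation (sound)."""
    if scanned_id != expected_id:
        return "reject"
    if scan_confidence >= min_confidence:
        return "accept"
    # Blurry scan: lean on the second sense before deciding.
    if spoken_reply is None:
        return "ask_worker"
    tokens = set(spoken_reply.lower().replace(",", " ").replace(".", " ").split())
    return "accept" if tokens & YES_WORDS else "reject"


print(verify_package("A1", 0.95, "A1"))                   # accept
print(verify_package("A1", 0.60, "A1"))                   # ask_worker
print(verify_package("A1", 0.60, "A1", "Yep, that's it")) # accept
```

Neither sense has to be perfect on its own. The second one is only consulted when the first is unsure.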

My Tip for Businesses

Don’t dive in headfirst—start small. Test one bot on inventory counts. I saw a single machine slash recount time at my gig—it caught mistakes we missed. It’s not about firing people; it’s about cutting the boring, repetitive stuff so workers can tackle the real puzzles, like why half the socks vanished that one shift!

Tip 2: Eldercare—Robots with a Gentle, Caring Touch

This one’s close to my heart. My grandma lives alone, and I’m always checking in—she forgets her meds sometimes, and stairs are tough. Multimodal AI robots could be her guardian angel, not just a gadget. These bots aren’t cold metal arms; they’re gentle helpers that understand what’s going on.

How They Work in Detail

- Seeing Needs: A bot’s camera spots if Grandma’s wobbly on her feet or if she’s spilled a glass of water. It’s not just motion—it’s reading the scene, like “She’s leaning too much; that’s not normal.”

- Hearing Her Voice: She says, “I’m cold,” and the bot grabs a blanket. It’s not stuck on stiff commands like “Blanket now”—it gets natural speech, even a mumble.

- Acting with Care: It moves slow and steady—no sudden jerks that could startle her. It’s programmed to prioritize safety, like pausing if she’s too close.
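The "slow and steady, pause if she's too close" behavior can be sketched as a speed limit that shrinks with distance. This is a minimal Python illustration; the function name `safe_speed` and the distances and speeds are values I picked for the example, not figures from any real eldercare robot.

```python
def safe_speed(distance_m, max_speed=0.5, stop_dist=0.3, slow_dist=1.5):
    """Return an allowed speed in m/s based on distance to the nearest person.

    Inside stop_dist the robot pauses; beyond slow_dist it may use max_speed;
    in between, the limit ramps up linearly.
    """
    if distance_m <= stop_dist:
        return 0.0
    if distance_m >= slow_dist:
        return max_speed
    fraction = (distance_m - stop_dist) / (slow_dist - stop_dist)
    return max_speed * fraction


for d in (0.2, 0.9, 2.0):
    print(d, round(safe_speed(d), 2))
```

A smooth ramp like this avoids the sudden jerks mentioned above, since the robot eases off gradually as it gets closer.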

Japan’s leading this charge. It has a huge aging population, with more than 28% of people aged 65 or older. Companies like Toyota and Honda are testing these bots, and a 2024 study found 65% of seniors felt safer with them around. That’s massive: it’s not just convenience, it’s comfort for families like mine.

Analogy: A Super-Smart Dog

Think of it like a loyal dog with extra powers. Your pup might nudge you if you’re down, but this “dog” sees your frown (vision), hears “I’m tired” (sound), and fetches a pillow (action)—all without grabbing the wrong thing. It’s a companion with a brain, not just instincts.

Features Compared

Here’s how it stacks up against older bots:

Table 2: Eldercare Robotics Features Comparison

| Feature | Basic Robot (2023) | Multimodal AI Robot (2025) |
|---|---|---|
| Vision | Detects motion | Reads facial expressions |
| Hearing | Simple commands | Understands natural speech |
| Response Time | 5-10 seconds | 2-3 seconds |
| Task Flexibility | Pre-set actions | Adapts to new tasks |
| Safety Features | Basic sensors | Obstacle avoidance + care |

Source: Compiled from robotics journals and vendor specs.

Facial Expressions Unpacked

What’s “reading facial expressions”? It’s like how you know your buddy’s mad from a scowl. Bots use cameras and AI to spot if Grandma’s smiling, frowning, or wincing—then act. If she winces, it might fetch water or ping me to check in. That’s not just tech—it’s empathy in metal form.
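Here is a tiny Python sketch of the "spot the expression, then act" step. It assumes some vision model has already produced an expression label and a confidence score. The labels, the `RESPONSES` mapping, and the 0.8 threshold are hypothetical choices for illustration.

```python
# Hypothetical mapping from a detected expression to a gentle response.
RESPONSES = {
    "wince": "notify_family",
    "frown": "ask_if_ok",
    "smile": "no_action",
}


def respond(expression, confidence, threshold=0.8):
    """Act only when the expression reading is confident enough."""
    if confidence < threshold:
        return "keep_observing"
    return RESPONSES.get(expression, "keep_observing")


print(respond("wince", 0.92))  # notify_family
print(respond("wince", 0.55))  # keep_observing
```

The threshold matters: a bot that pings the family over every shadow on Grandma's face would get switched off within a week.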

Why It Matters

Beyond tasks, it’s about dignity. Grandma doesn’t want to feel helpless—she wants to stay independent. A bot that “gets” her needs without her shouting commands keeps her in charge. Plus, it’s a lifeline—if she falls, it can call for help faster than she could grab her phone.

My Tip for Families

Customize it to feel like family. Program it with your loved one’s quirks—I’d set Grandma’s bot to say “Alex says eat your veggies” with her lunch reminder. It’s less “robot stranger” and more “helpful kin,” easing her into it.

Tip 3: Navigating the Bumps—Keeping It Real

Let’s be honest—this tech’s amazing but not perfect. My high school robot once rolled into a wall because I forgot a sensor—oops! Multimodal AI is light-years ahead, but it’s got its own quirks we need to watch.

The Challenges in Detail

- Misreading Cues: A bot might “see” a shadow as a wall and freeze. It’s like mistaking a cloud for a storm—overcautious but annoying. Happens less now, but it’s not gone.

- Noise Confusion: In a loud warehouse, “Move!” might sound like “Moo!” The bot’s noise filters—think earplugs for robots—help, but they’re not foolproof yet.

- Cost: Top bots run $50,000+—that’s cameras, motors, and AI brains. Not pocket change for a small biz or family.

Google’s working on it—Gemini 2.0’s 2025 updates promise better filters and cheaper builds. But for now, it’s smart to prep for hiccups.

Analogy: Party Noise

Imagine a loud party—you strain to hear “Pass the chips.” A noise filter is like a magic ear that blocks the chatter and locks onto “chips.” Gemini’s getting that ear, but sometimes it grabs dip instead—close, but not quite.

The Numbers

Graph 2: Adoption Challenges Over Time

Cost Breakdown

That $50,000? It’s $20,000 for hardware (cameras, arms), $25,000 for the AI software, and $5,000 for setup. Experts predict it’ll drop to $30,000 by 2026 as parts get cheaper—still a big upfront hit, but the payoff’s in efficiency.
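For the number-minded, here is the same cost breakdown as a short Python calculation, showing each part's share and how big the predicted drop to $30,000 would be. The variable names are mine; the dollar figures are the ones quoted above.

```python
# Cost figures in USD, as quoted in this post.
costs = {"hardware": 20_000, "ai_software": 25_000, "setup": 5_000}

total = sum(costs.values())
shares = {part: amount / total for part, amount in costs.items()}

predicted_2026 = 30_000
drop = (total - predicted_2026) / total

print(total)  # 50000
for part, share in shares.items():
    print(f"{part}: {share:.0%}")
print(f"predicted drop by 2026: {drop:.0%}")  # predicted drop by 2026: 40%
```

So the AI software is half the bill today, and the predicted 2026 price would be a 40% cut from the current total.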

Why It’s Worth It

Even with bumps, the upside’s huge—fewer delays, safer spaces. My old bot taught me: a glitchy start beats no start. You just tweak as you go.

My Tip

Test small—one warehouse shift or pill reminders for Grandma. I learned slow and steady beats a robot wreck—ease in, and you’ll dodge the headaches.

What’s Next: The Big, Exciting Picture

We’re just scratching the surface. Warehouses and eldercare are the launchpad. Imagine bots teaching kids by “seeing” their confusion or finding disaster survivors by “hearing” faint cries. The AI robotics market is projected to reach about $62 billion in 2025, and multimodal AI’s the spark.

Predictions Unpacked

- Home Helpers: By 2030, bots might cook dinner while chatting—think a sous-chef who never burns the toast.

- Job Teamwork: Not replacing us, but partnering—bots lift heavy stuff, we strategize.

- Big Questions: Privacy (cameras everywhere?) and safety will heat up—think debates over who controls the data.

Rescue Scenario

Picture a collapsed building. A bot “hears” a trapped person’s whisper, “sees” a safe path through rubble, and guides rescuers. That’s not sci-fi—it’s where this could land, saving lives with tech.

Why It’s Coming

The push is global—China’s racing for AGI, Europe’s on energy-efficient bots, and the U.S. (like Google) is blending it into daily life. It’s a tech tidal wave, and we’re riding it.

Wrapping Up: Why This Matters to You

Thanks for sticking with me! Robotics with multimodal AI—like Gemini 2.0—isn’t just geek talk. It’s speeding up warehouses, caring for grandparents, and hinting at a future where machines are true partners. My tips—small tests, personal tweaks, cautious rollouts—let you jump in without tripping.

What do you think? Could a bot fit your world? Drop a comment—I’d love to hear! Share this with a tech buddy or caregiver. Let’s keep exploring this wild ride together!
